Exploring how accurate segmentation and high-quality data drive the next generation of shadow-free computer vision

Part 1: Recent Advances in Shadow Removal Techniques

Shadow removal refers to the process of generating a shadow-free version of an image that originally contains cast shadows. This task is essential for improving the performance of downstream visual tasks such as object detection, scene parsing, and relighting. Over the past decade, this field has experienced notable progress, moving from handcrafted models to learning-based systems.

1. Physics-Based and Traditional Approaches

Early techniques relied on physical models of light, color constancy assumptions, and manually designed priors. These methods often involved:

- Modeling illumination differences between shadow and non-shadow regions.

- Using shadow matting and color transfer techniques.

- Accepting user inputs to guide the removal process.

While useful for controlled environments, these approaches performed poorly when faced with soft shadows, non-uniform lighting, and complex object boundaries.
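
As a rough illustration of the illumination-difference idea above, the sketch below scales shadow pixels so that their per-channel mean matches the lit region. It is not any specific published method. The function name `relight_shadow_region` is hypothetical, and the code assumes a hard binary mask and a single surface material on both sides of the shadow boundary.

```python
import numpy as np

def relight_shadow_region(image, shadow_mask, eps=1e-6):
    """Scale shadow pixels so their per-channel mean matches the lit region.

    image: float array of shape (H, W, 3) with values in [0, 1]
    shadow_mask: bool array of shape (H, W), True where the pixel is in shadow
    Assumption: the same material lies on both sides of the shadow boundary.
    """
    image = np.asarray(image, dtype=np.float64)
    shadow_mask = np.asarray(shadow_mask, dtype=bool)
    if not shadow_mask.any() or shadow_mask.all():
        return image.copy()  # no lit/shadow pair to compare

    lit_mean = image[~shadow_mask].mean(axis=0)     # shape (3,)
    shadow_mean = image[shadow_mask].mean(axis=0)   # shape (3,)
    gain = lit_mean / (shadow_mean + eps)           # per-channel brightening factor

    out = image.copy()
    out[shadow_mask] = np.clip(image[shadow_mask] * gain, 0.0, 1.0)
    return out
```

Because the gain is constant across the whole mask, a soft shadow edge or a second surface material leaves a visible seam, which is the kind of failure described above.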

2. Deep Learning-Based Methods

The development of convolutional neural networks brought a paradigm shift to the field. Several influential models have pushed the boundaries of what is possible:

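- DSC (2018) introduced direction-aware spatial context features, aggregated by a spatial recurrent network with learned attention weights, for shadow detection; the same features were later extended to shadow removal.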

- Mask-ShadowGAN (2019) enabled unpaired training using generative adversarial networks and synthetic mask guidance.

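- SP+M-Net (2019) decomposed the shadow image with a linear illumination model: SP-Net predicts the shadow parameters used to relight the shadow region, and M-Net predicts a shadow matte used to blend the relit and original images.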

- LG-ShadowNet (2021) used a lightness-guided design trained on unpaired data, in which a module that corrects the lightness channel guides a second module that removes the shadow.

These models significantly improved shadow removal quality, but most still relied on shadow masks, either supplied or learned, to guide training and inference.

3. Diffusion and Generative Techniques

More recent techniques explore generative frameworks beyond CNNs, including:

- Text-to-shadow-editing with multimodal foundation models.

- Image-conditioned diffusion models for lighting adjustment and relighting control.

- Disentanglement of shadows from content using latent-space manipulation.

Although these approaches offer greater flexibility and controllability, they still depend on knowing where the shadows are, which underlines the importance of precise shadow segmentation.

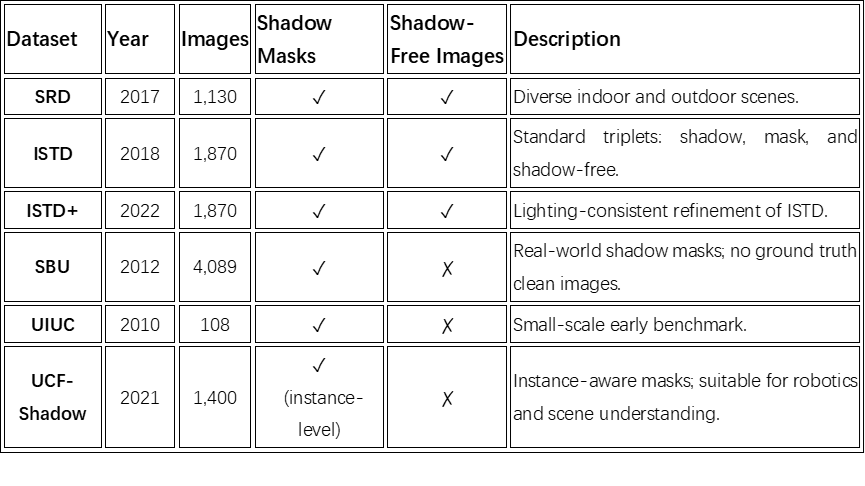

Part 2: Public Datasets for Shadow Segmentation

Training robust shadow removal models requires high-quality datasets containing both shadow masks and, ideally, shadow-free ground truth images. Several benchmark datasets have been widely adopted in both academia and industry.

Each dataset varies in terms of:

- Whether ground truth clean images are available.

- The granularity of the shadow masks (binary, alpha, or instance-level).

- The scene types included (urban, indoor, natural, etc.).

High-quality segmentation datasets are particularly important because most shadow removal models rely on masks for attention guidance, training supervision, and region-specific learning.
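
One way to make these dataset differences explicit in code is to record them per sample. The sketch below is an assumed schema, not the format of any particular benchmark; `ShadowSample`, `MaskType`, and `select_paired` are hypothetical names.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional

class MaskType(Enum):
    BINARY = "binary"      # 0/1 per pixel
    ALPHA = "alpha"        # soft value in [0, 1] per pixel
    INSTANCE = "instance"  # integer id per shadow

@dataclass(frozen=True)
class ShadowSample:
    image_path: str
    mask_path: str
    mask_type: MaskType
    scene_type: str                         # e.g. "urban", "indoor", "natural"
    shadow_free_path: Optional[str] = None  # None when no clean ground truth exists

    @property
    def supports_paired_training(self) -> bool:
        # Paired (supervised) removal needs the shadow-free target image.
        return self.shadow_free_path is not None

def select_paired(samples: List[ShadowSample]) -> List[ShadowSample]:
    """Keep only the samples that can supervise removal directly."""
    return [s for s in samples if s.supports_paired_training]
```

Samples without a shadow-free image can still supervise detection or unpaired removal, so a loader would typically route them rather than discard them.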

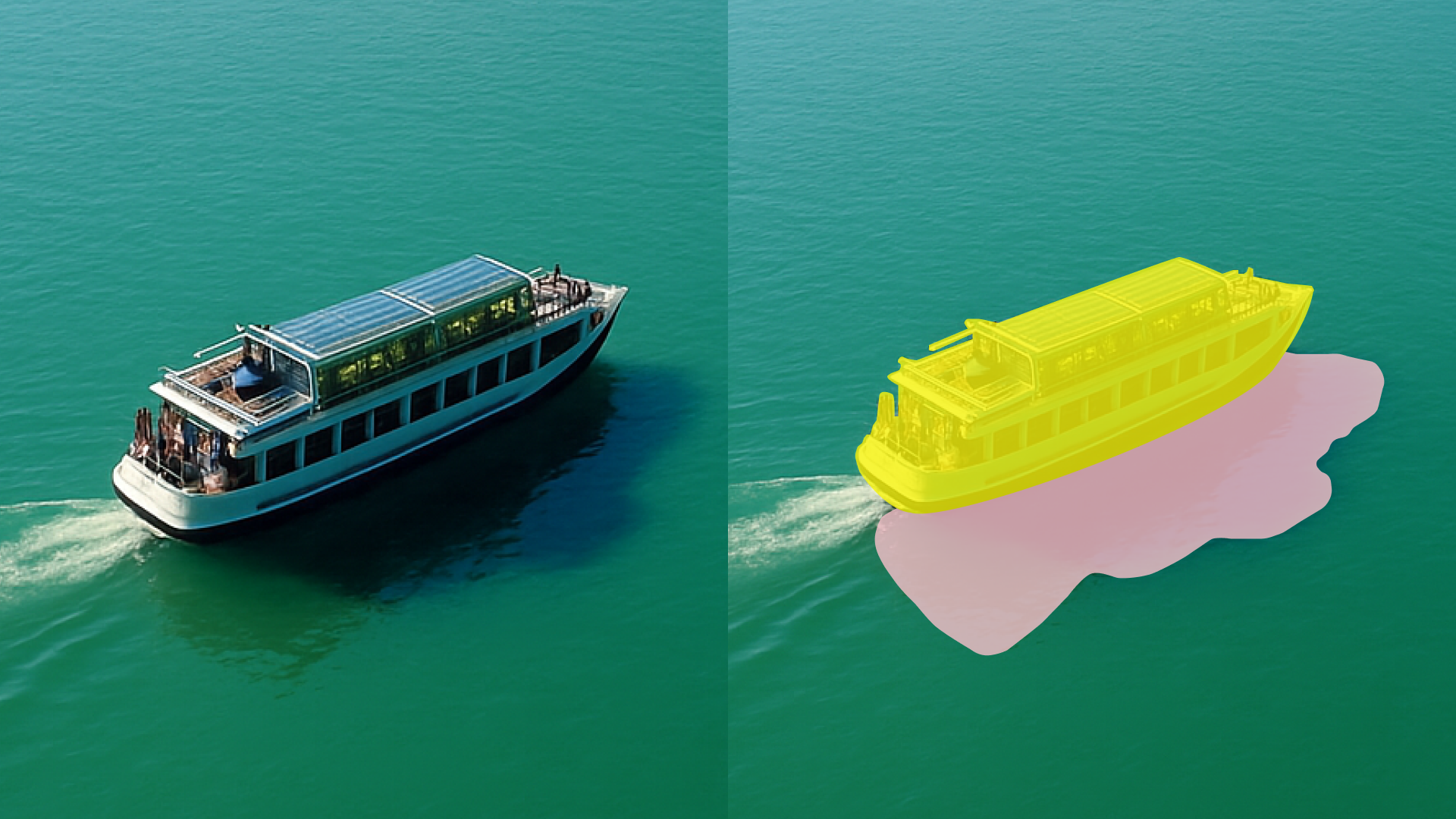

Part 3: Best Practices in Shadow Segmentation Annotation

Accurate shadow segmentation is technically challenging. Shadows often have soft edges and variable transparency, and they may blend into similarly colored textures. According to maadaa.ai's Shadow Segmentation Annotation Guide, successful annotation requires careful strategy and tooling.

Key Challenges in Shadow Annotation

- Soft and ambiguous boundaries: Many shadows lack sharp transitions and require gradient labeling rather than binary masks.

- Complex lighting conditions: Multiple light sources and indirect illumination can make shadows appear inconsistent or misleading.

- Visual confusion: Dark-colored regions or surface textures can be mistaken for shadows if contextual clues are ignored.

Recommended Annotation Practices

To improve segmentation quality and training data reliability, the following practices are recommended:

1. Combine Polygon and Brush-Based Annotation Tools

Polygon tools are best suited for well-defined cast shadows, while brush tools allow annotators to trace irregular or soft shadows more naturally.

2. Use Alpha Masks for Soft Shadows

In addition to binary masks, annotators should be able to label transparency using alpha channels to represent the gradual intensity of shadows, especially for translucent objects or indirect shadows.
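
To see what a binary mask loses on a soft shadow, the sketch below composites the same penumbra twice, once with the soft alpha values and once with a thresholded copy. The compositing rule and the `darkness` factor are simplifying assumptions chosen for illustration, and the function names are hypothetical.

```python
import numpy as np

def apply_soft_shadow(shadow_free, alpha, darkness=0.5):
    """Darken an image by an alpha matte.

    alpha = 0 leaves a pixel fully lit; alpha = 1 applies the full darkening.
    `darkness` is the assumed fraction of light removed in the umbra.
    """
    shadow_free = np.asarray(shadow_free, dtype=np.float64)
    alpha = np.asarray(alpha, dtype=np.float64)[..., None]  # broadcast over RGB
    return shadow_free * (1.0 - alpha * darkness)

def binarize(alpha, threshold=0.5):
    """Collapse a soft matte into a hard 0/1 mask."""
    return (np.asarray(alpha) >= threshold).astype(np.float64)

# A one-pixel-high strip whose shadow fades out over five pixels.
alpha = np.array([[1.0, 0.75, 0.5, 0.25, 0.0]])
surface = np.full((1, 5, 3), 0.8)

soft = apply_soft_shadow(surface, alpha)
hard = apply_soft_shadow(surface, binarize(alpha))

# Largest per-pixel intensity difference between the two renderings.
penumbra_error = np.abs(soft - hard).max()
```

The two renderings agree in the umbra and in the fully lit area; the difference is confined to the penumbra, which is the region an alpha annotation is meant to capture.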

3. Train Annotators on Scene Context Awareness

High-quality annotation depends on the annotator’s ability to understand:

- The direction and intensity of the light source.

- The object casting the shadow.

- The geometry of the receiving surface.

Training sessions should teach annotators how to distinguish real shadows from surface patterns or dark regions.

4. Implement Quality Assurance Pipelines

Two or more rounds of review are essential. QA pipelines should include:

- Cross-validation with model-generated masks.

- Rule-based checking for common annotation errors.

- Human-in-the-loop review for ambiguous regions.
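
A minimal sketch of how the first two checks could be automated is shown below. The thresholds `min_iou` and `max_coverage`, the flag names, and the function `qa_flags` are illustrative assumptions; real values would need tuning per dataset. Flagged masks would then go to the human review step.

```python
import numpy as np

def iou(mask_a, mask_b):
    """Intersection over union of two binary masks."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # both masks empty: treat as full agreement
    return float(np.logical_and(a, b).sum() / union)

def qa_flags(human_mask, model_mask, min_iou=0.7, max_coverage=0.95):
    """Return a list of reasons why an annotation should be reviewed."""
    human = np.asarray(human_mask, dtype=bool)
    flags = []
    # Rule-based checks for common annotation errors.
    if not human.any():
        flags.append("empty_mask")
    if human.mean() > max_coverage:
        flags.append("near_full_coverage")
    # Cross-validation against a model-generated mask.
    if iou(human, model_mask) < min_iou:
        flags.append("low_agreement_with_model")
    return flags
```

Low agreement does not mean the annotator is wrong, since the model may be the one confused by a dark texture, so the flag is a prompt for review rather than an automatic rejection.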

5. Adopt Scene-Aware Annotation Platforms

Annotation platforms should provide:

- Overlays with different visual modes (e.g., edge maps, brightness-enhanced views).

- Adjustable transparency for mask previews.

- Annotator feedback and correction loops.
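
The adjustable-transparency preview can be sketched as a simple blend of the image with a tint color. This is an assumed implementation for illustration, with a hypothetical function name `overlay_mask`; it is not taken from any specific annotation tool.

```python
import numpy as np

def overlay_mask(image, mask, color=(1.0, 0.0, 0.0), opacity=0.4):
    """Tint masked pixels with `color`; `opacity` controls how strongly.

    image: float array (H, W, 3) in [0, 1]
    mask: array (H, W), binary or soft alpha values in [0, 1]
    """
    image = np.asarray(image, dtype=np.float64)
    weight = np.asarray(mask, dtype=np.float64)[..., None] * opacity  # (H, W, 1)
    tint = np.asarray(color, dtype=np.float64)                        # (3,)
    return image * (1.0 - weight) + tint * weight
```

Because the mask is multiplied by the opacity, the same function previews both binary and alpha masks, and lowering `opacity` lets the annotator see the underlying texture while checking a boundary.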

These practices improve annotation quality and, in turn, can improve the performance of shadow removal models that are trained and evaluated on the resulting data.

Part 4: Why Data Quality Drives Shadow Removal Progress

Even with powerful network architectures, shadow removal performance is tightly bound to the quality of the training data. In particular, accurate and diverse segmentation annotations have the following impact:

1. Better Localization Enhances Learning Efficiency

Models that receive accurate masks can focus on correcting only shadow-affected regions. This reduces the risk of overcorrecting unaffected areas and helps preserve lighting realism.
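
One common way to restrict correction to shadow regions is to composite the network output with the input through the mask. The sketch below assumes the prediction comes from some removal model and uses a hypothetical function name `composite_with_mask`.

```python
import numpy as np

def composite_with_mask(original, predicted, mask):
    """Take `predicted` inside the shadow mask and `original` elsewhere.

    original, predicted: float arrays (H, W, 3)
    mask: array (H, W) with values in [0, 1]; soft values blend the two images
    """
    original = np.asarray(original, dtype=np.float64)
    predicted = np.asarray(predicted, dtype=np.float64)
    m = np.clip(np.asarray(mask, dtype=np.float64), 0.0, 1.0)[..., None]
    return m * predicted + (1.0 - m) * original
```

With this composite, pixels outside the mask are copied from the input unchanged, so an inaccurate mask directly becomes either an uncorrected shadow fringe or an altered lit region.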

2. Diverse Data Improves Generalization

Real-world scenes contain various shadow types: cast shadows, self-shadows, transparent object shadows, and environmental shadows. A diverse dataset helps models generalize to previously unseen conditions.

3. Granular Annotation Enables Advanced Supervision

Different forms of annotation support different model designs:

- Binary masks support classification-style correction.

- Alpha masks allow regression of intensity or softness.

- Instance masks enable object-aware lighting adjustment and relighting.
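
The pairing between annotation type and supervision can be sketched with plain NumPy loss functions. These are generic choices (binary cross-entropy for binary masks, L1 for alpha masks), not the losses of any specific model above, and `split_instances` is a hypothetical helper.

```python
import numpy as np

def binary_cross_entropy(pred_prob, target_mask, eps=1e-7):
    """Classification-style loss for binary shadow masks."""
    p = np.clip(np.asarray(pred_prob, dtype=np.float64), eps, 1.0 - eps)
    t = np.asarray(target_mask, dtype=np.float64)
    return float(-(t * np.log(p) + (1.0 - t) * np.log(1.0 - p)).mean())

def alpha_l1(pred_alpha, target_alpha):
    """Regression loss for soft (alpha) shadow masks."""
    diff = np.asarray(pred_alpha, dtype=np.float64) - np.asarray(target_alpha, dtype=np.float64)
    return float(np.abs(diff).mean())

def split_instances(instance_map):
    """Turn an integer instance map (0 = no shadow) into one binary mask per shadow."""
    instance_map = np.asarray(instance_map)
    return {int(i): instance_map == i for i in np.unique(instance_map) if i != 0}
```

Per-instance masks from `split_instances` can then be supervised or relit one shadow at a time, which is what makes object-aware adjustment possible.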

Conclusion: From Better Masks to Better Models

Shadow removal is no longer a niche research topic. It plays a growing role in fields such as autonomous driving, virtual and augmented reality, satellite imaging, and medical diagnostics. As new models become more general-purpose and generative, the need for high-quality, domain-specific shadow segmentation data becomes even more critical.

To unlock the next level of performance in shadow removal systems, the following steps are essential:

- Curate larger and more diverse datasets across domains.

- Standardize annotation guidelines and quality metrics.

- Encourage collaboration between researchers, data vendors, and tool providers.

Ultimately, better shadow segmentation enables cleaner inputs, smarter learning, and more realistic visual outputs, forming the foundation for truly intelligent vision systems.

References

- Awesome Shadow Removal GitHub

- maadaa.ai: Shadow Segmentation Annotation Best Practices

- Hu et al., “Direction-aware Spatial Context Features for Shadow Detection” (CVPR 2018)

- Wang et al., “Stacked Conditional Generative Adversarial Networks for Jointly Learning Shadow Detection and Shadow Removal” (CVPR 2018)

- Le and Samaras, “Shadow Removal via Shadow Image Decomposition” (ICCV 2019)

- Guo et al., “ShadowFormer: Global Context Helps Image Shadow Removal” (AAAI 2023)

- Liu et al., “Shadow Removal by a Lightness-Guided Network with Training on Unpaired Data” (IEEE TIP 2021)