
1. Overview of Panoptic Segmentation

Panoptic segmentation labels every pixel in an image: countable foreground objects ("things") receive both a class label and an instance ID, while amorphous background regions ("stuff") receive a class label only. It is increasingly vital for applications like autonomous driving, healthcare diagnostics, remote sensing, agriculture, and digital imaging. The quality of panoptic segmentation datasets plays a pivotal role in training AI models to deliver accurate, real-world results.

In this article, we will highlight 15 top-tier image segmentation datasets, categorized into open datasets and commercial datasets, to help you elevate your machine learning and AI projects. By choosing high-quality segmentation datasets, you can ensure your models are robust and reliable for various real-world applications.
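
To make the thing/stuff distinction concrete, here is a minimal sketch of how a panoptic label can be built for each pixel. The class IDs, the `THING_CLASSES` set, and the function name are hypothetical and chosen only for illustration; each real dataset defines its own encoding.

```python
# Hypothetical class IDs for illustration; real datasets define their own.
SKY, ROAD, CAR, PERSON = 0, 1, 2, 3
THING_CLASSES = {CAR, PERSON}  # countable objects; everything else is "stuff"


def to_panoptic(semantic, instance):
    """Combine per-pixel class IDs and instance IDs into (class, instance) pairs.

    Stuff pixels get instance None, because stuff regions are not split
    into separate objects.
    """
    panoptic = []
    for sem_row, inst_row in zip(semantic, instance):
        row = []
        for cls, inst in zip(sem_row, inst_row):
            row.append((cls, inst if cls in THING_CLASSES else None))
        panoptic.append(row)
    return panoptic


# A tiny 2 x 4 "image": sky on top, two cars on a road below.
semantic = [[SKY, SKY, SKY, SKY],
            [CAR, ROAD, CAR, CAR]]
instance = [[0, 0, 0, 0],
            [1, 0, 2, 2]]

panoptic = to_panoptic(semantic, instance)
segments = {label for row in panoptic for label in row}
print(len(segments))  # 4 segments: sky, road, car 1, car 2
```

Every pixel ends up in exactly one segment, which is the property that separates panoptic segmentation from running semantic and instance segmentation independently.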

2. Open Datasets for Panoptic Segmentation


1. COCO Panoptic Dataset

The COCO (Common Objects in Context) dataset is one of the most widely used segmentation benchmarks in computer vision. Its panoptic extension assigns both a semantic label and an instance label to each pixel. The 2017 split provides roughly 118,000 training images and 5,000 validation images, plus a held-out test set, annotated with 80 thing classes and 53 stuff classes. COCO is a strong choice for diverse real-world applications in object detection, keypoint estimation, and panoptic segmentation.

Link: COCO Panoptic Dataset

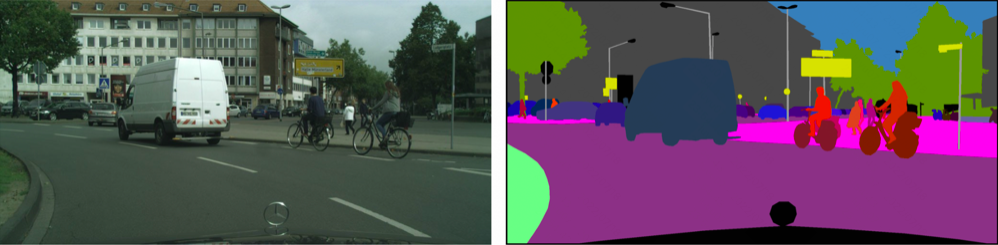
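
As an illustration of how these per-pixel labels are stored, the COCO panoptic format keeps segment IDs in PNG files, where each pixel's RGB color encodes one ID as R + 256 * G + 256^2 * B. The sketch below shows only that arithmetic; the helper names are illustrative, and loading the PNG itself is left out.

```python
def rgb_to_segment_id(rgb):
    """Decode one pixel's (R, G, B) color into a COCO panoptic segment ID."""
    r, g, b = rgb
    return r + 256 * g + 256 * 256 * b


def segment_id_to_rgb(segment_id):
    """Encode a segment ID back into the (R, G, B) color stored in the PNG."""
    r = segment_id % 256
    g = (segment_id // 256) % 256
    b = (segment_id // (256 * 256)) % 256
    return (r, g, b)


print(rgb_to_segment_id((1, 2, 3)))   # 197121
print(segment_id_to_rgb(197121))      # (1, 2, 3)
```

Each decoded ID is then matched against the `segments_info` entries in the accompanying JSON annotation to find the segment's category.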

2. Mapillary Vistas Dataset

Mapillary Vistas offers a challenging and large-scale panoptic segmentation dataset containing street-level images from various global regions. With over 25,000 annotated images and 65 categories, it is a prime resource for urban scene understanding, useful for applications like autonomous driving. The dataset includes pixel-accurate and instance-specific annotations, making it ideal for building advanced AI models.

Link: Mapillary Vistas Dataset

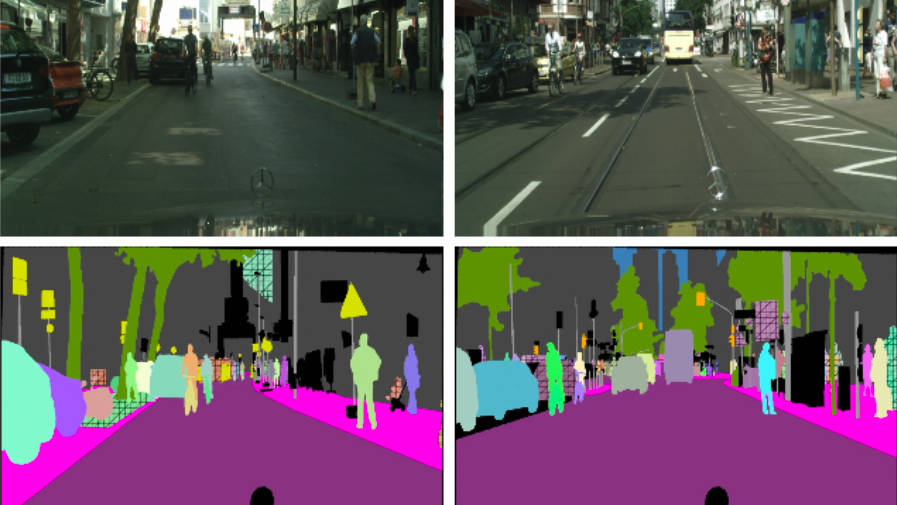

3. Cityscapes Dataset

Focused on urban street scenes, Cityscapes provides 5,000 finely annotated images with both semantic and instance-level labels, alongside a larger set of coarsely annotated images. Its standard benchmarks evaluate 19 classes, which makes it well suited to panoptic segmentation tasks. It remains one of the most popular datasets for developing AI for autonomous vehicles, especially in cities.

Link: Cityscapes Dataset
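
Cityscapes packs semantic and instance annotations into a single integer per pixel in its `instanceIds` PNG files: values below 1000 are plain class IDs, while larger values encode class ID * 1000 + instance index. A minimal sketch of decoding one such value follows; the function name is illustrative, and reading the PNG is assumed to happen elsewhere.

```python
def decode_cityscapes_instance_id(value):
    """Split a Cityscapes instanceIds pixel value into (class_id, instance).

    Values below 1000 carry no instance information (stuff, or things
    without instance labels), so the instance is returned as None.
    """
    if value < 1000:
        return value, None
    return value // 1000, value % 1000


# 26 is the label ID for "car" and 7 for "road" in the Cityscapes label table.
print(decode_cityscapes_instance_id(26001))  # (26, 1)
print(decode_cityscapes_instance_id(7))      # (7, None)
```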

4. ADE20K Dataset

ADE20K provides a rich set of 25,000 images with over 150 categories of objects, perfect for segmentation tasks in real-world environments. It is particularly useful for semantic segmentation and panoptic segmentation research. The dataset includes both things (e.g., cars, furniture) and stuff (e.g., sky, grass), enabling you to train deep learning models on a variety of scenes.

Link: ADE20K Dataset

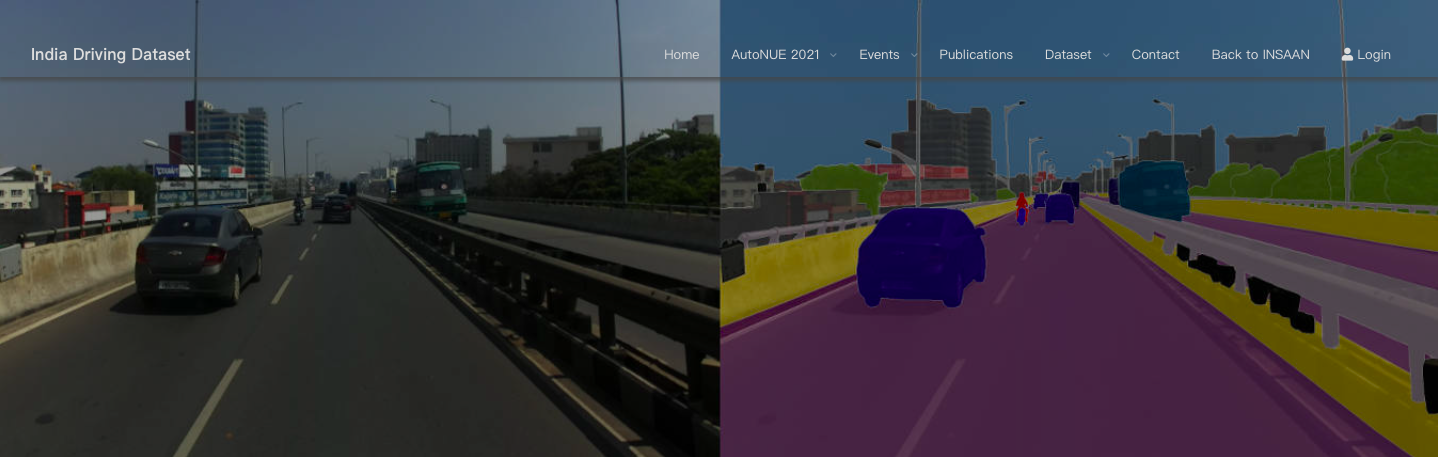

5. Indian Driving Dataset (IDD)

The IDD dataset offers a comprehensive collection of road scenes from Indian cities. It includes annotations for 34 classes and is designed for understanding unstructured road environments. With over 10,000 images, it is tailored for developing models that handle challenging real-world driving scenarios, particularly in non-Western, less-structured urban areas.

Link: Indian Driving Dataset

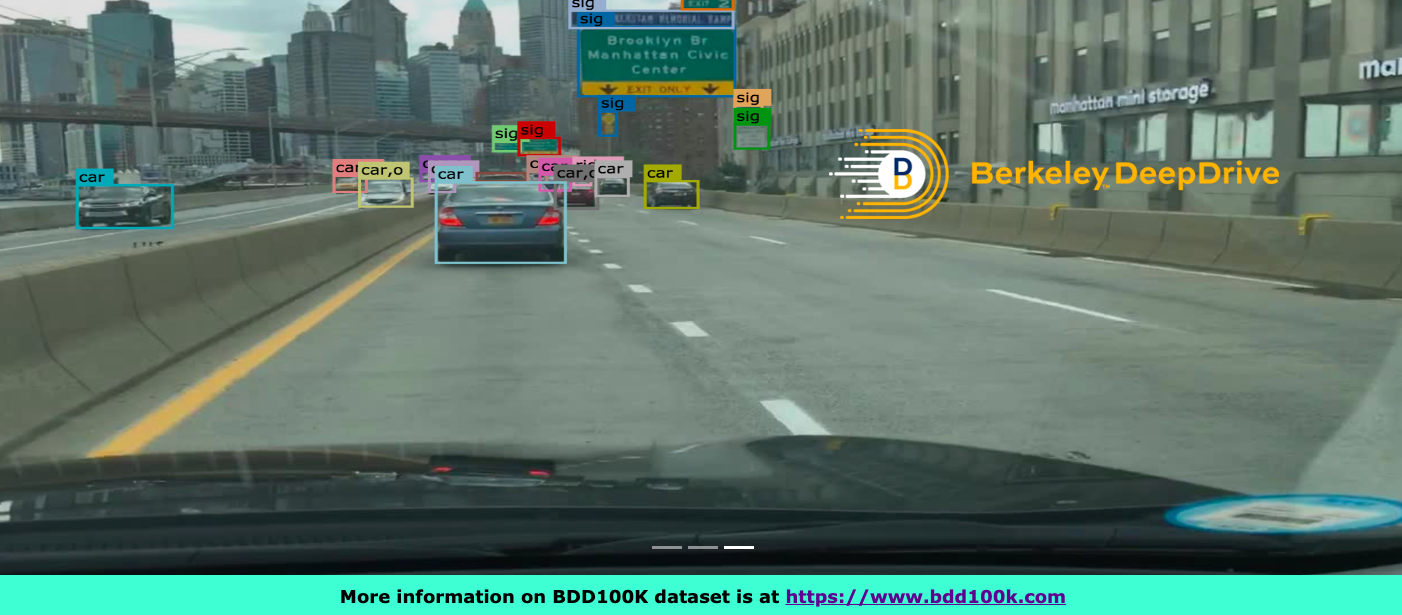

6. BDD100K Panoptic Segmentation

BDD100K is a large-scale driving video dataset featuring 100,000 videos captured across multiple U.S. cities. Pixel-wise panoptic segmentation annotations are provided for a subset of about 10,000 images rather than for every video. With its diverse set of scenes, it is highly valuable for training models for autonomous driving and scene understanding.

Link: BDD100K Panoptic Segmentation

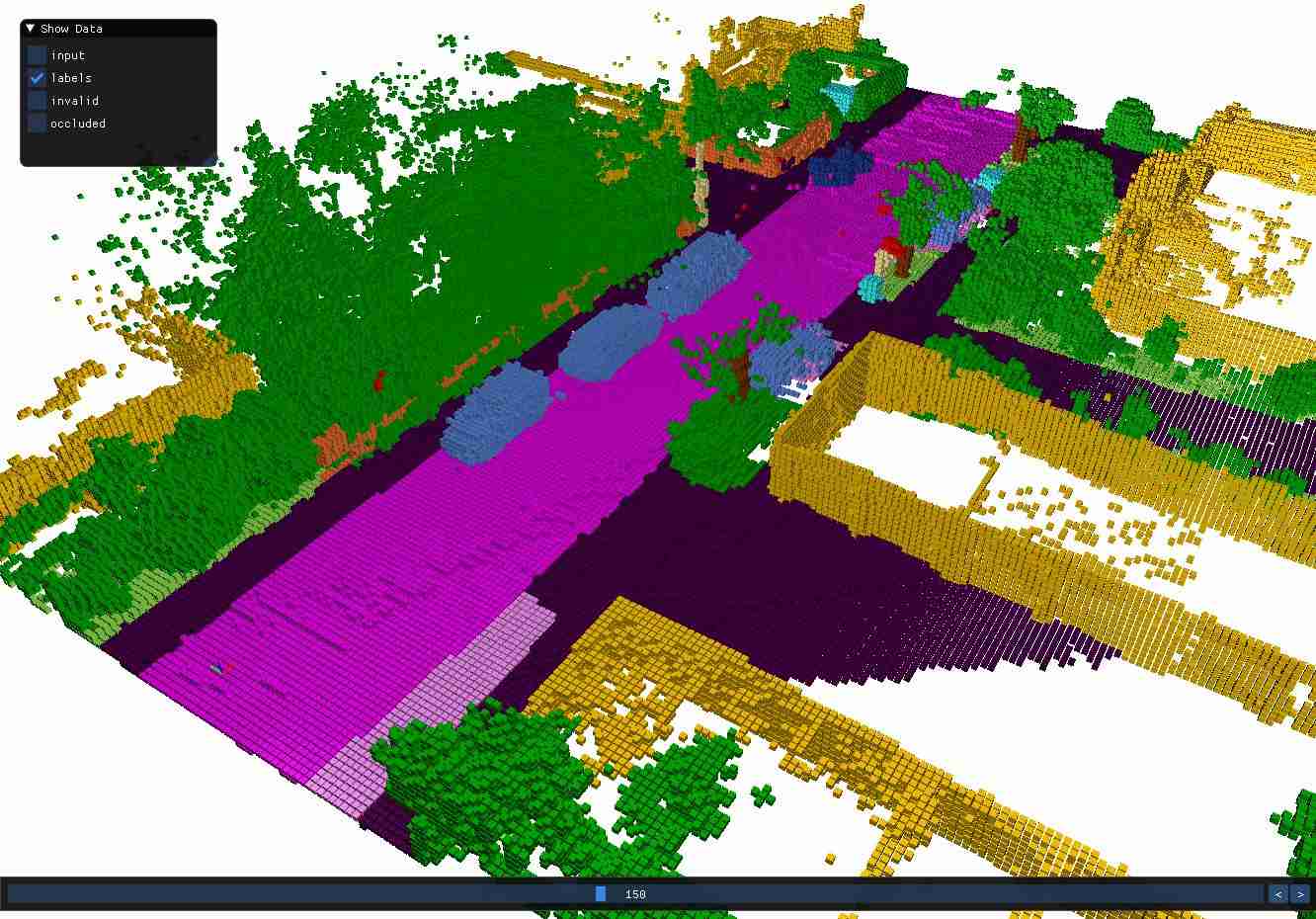

7. SemanticKITTI Panoptic Segmentation

SemanticKITTI is a dataset of lidar sequences of street scenes in Karlsruhe (Germany). It contains 11 driving sequences with panoptic segmentation labels. The labels use 6 thing and 16 stuff categories.

Link: SemanticKITTI Dataset

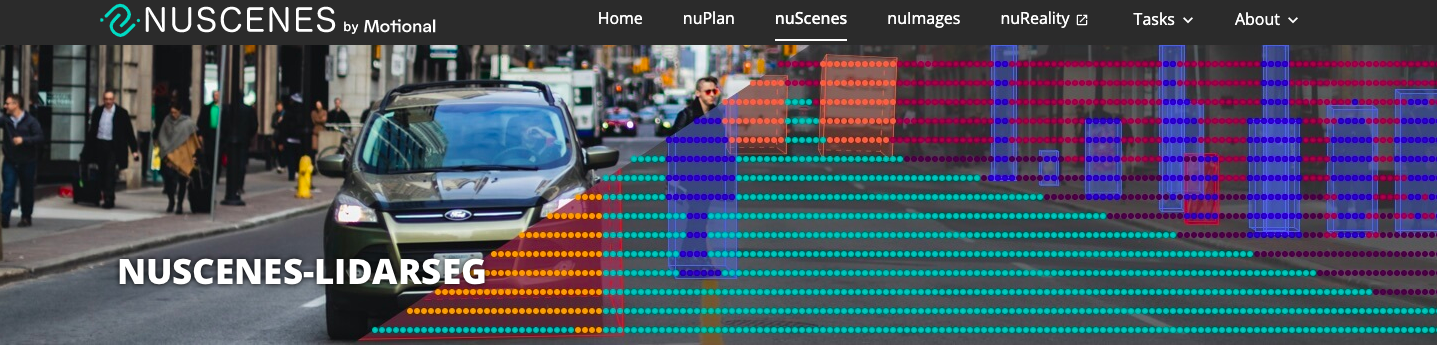
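
SemanticKITTI stores one unsigned 32-bit integer per lidar point in its `.label` files: the lower 16 bits hold the semantic class and the upper 16 bits hold the instance ID. Below is a minimal sketch of unpacking these values with the standard library only. The function name and sample values are illustrative, and little-endian byte order is assumed.

```python
import struct


def decode_semantickitti_labels(raw_bytes):
    """Return a list of (semantic_class, instance_id) pairs, one per point."""
    count = len(raw_bytes) // 4  # four bytes per uint32 label
    values = struct.unpack(f"<{count}I", raw_bytes[:count * 4])
    return [(v & 0xFFFF, v >> 16) for v in values]


# Two synthetic points: class 10 with instance 3, and class 40 with no instance.
sample = struct.pack("<2I", (3 << 16) | 10, 40)
print(decode_semantickitti_labels(sample))  # [(10, 3), (40, 0)]
```

In practice the bytes would come from reading a `.label` file in binary mode, with one entry for each point in the matching scan.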

8. nuScenes-lidarseg

nuScenes is a large-scale autonomous driving dataset. It consists of 1,000 scenes of 20 seconds each, recorded on urban streets in Singapore and Boston. The dataset includes point clouds captured by a lidar sensor, as well as synchronized camera data. The nuScenes-lidarseg annotations use 23 thing and 9 stuff classes.

Link: nuScenes-lidarseg Dataset

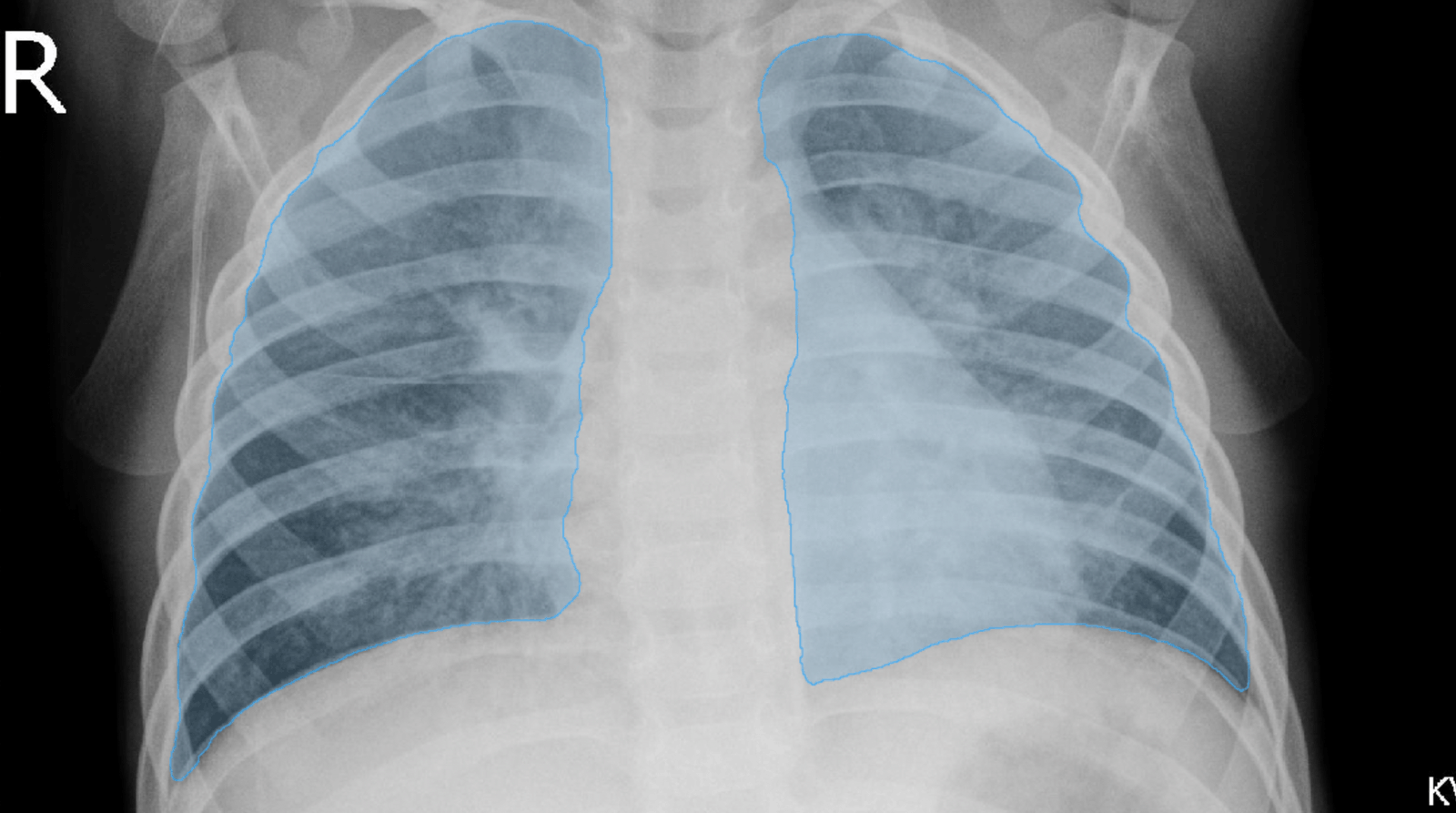

9. COVID-19 X-Ray Dataset (V7)

This original V7 dataset contains 6,500 AP/PA chest X-ray images with pixel-level polygonal lung segmentations, including 517 cases of COVID-19.

Lung annotations are polygons following pixel-level boundaries. You can export them in COCO, VOC, or Darwin JSON formats. Each annotation file contains a URL to the original full-resolution image and a reduced-size thumbnail.

Link: COVID-19 X-Ray Dataset

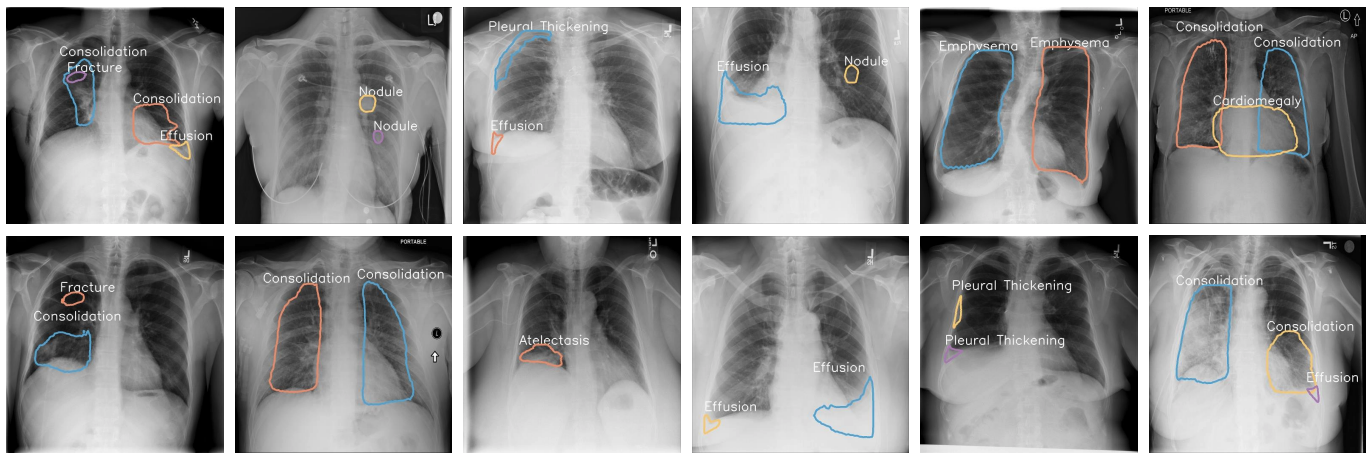
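
When polygons such as these lung outlines are exported in COCO format, each one is a flat list of alternating x and y coordinates. As a small sketch of working with that layout, the snippet below pairs the coordinates up and computes the enclosed area with the shoelace formula. The function names and the sample square are illustrative and are not taken from the V7 export.

```python
def flat_to_points(flat):
    """Turn [x1, y1, x2, y2, ...] into [(x1, y1), (x2, y2), ...]."""
    return list(zip(flat[0::2], flat[1::2]))


def polygon_area(points):
    """Area of a simple polygon in square pixels, via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


# A 20 x 20 pixel square standing in for a real lung outline.
square = [10, 10, 30, 10, 30, 30, 10, 30]
print(polygon_area(flat_to_points(square)))  # 400.0
```

A check like this is a quick way to spot degenerate or near-empty polygons before training.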

10. NIH Chest X-Ray Dataset

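A dataset containing over 100,000 chest X-ray images annotated with image-level diagnostic labels. The original NIH release includes bounding boxes for a small subset of images rather than pixel-level segmentation masks.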

Link: NIH Chest X-Ray Dataset

11. OASIS

The Open Access Series of Imaging Studies (OASIS) is a project aimed at making neuroimaging datasets of the brain freely available to the scientific community.

Link: OASIS Dataset

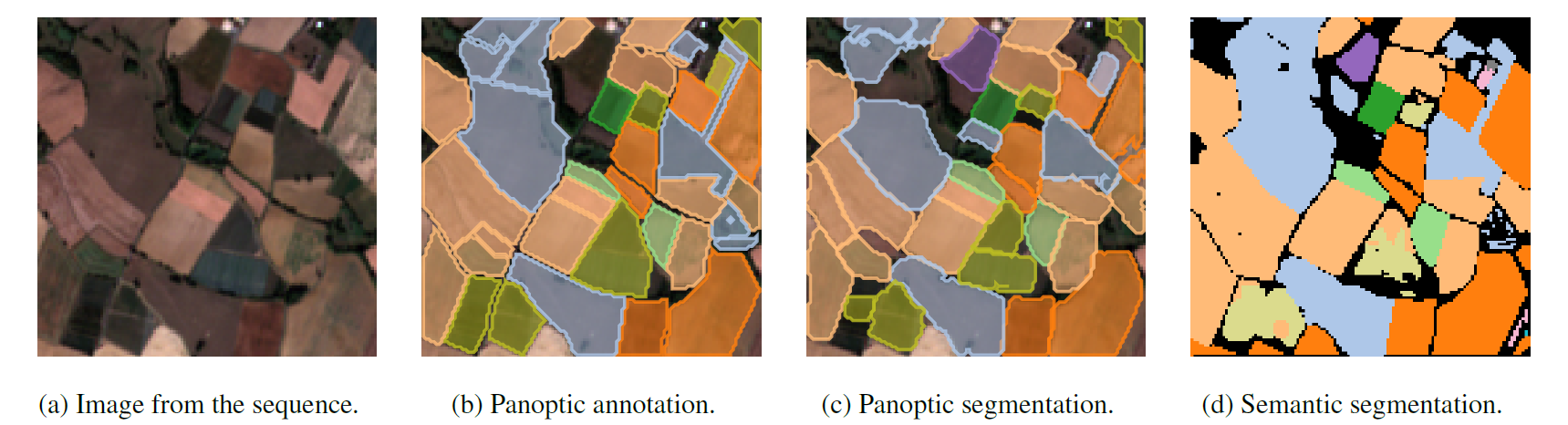

12. PASTIS: Panoptic Agricultural Satellite Time Series

PASTIS is a dataset of agricultural satellite images. It contains 2,433 variable-length time series of multispectral images, in which agricultural parcels are annotated with one of 18 crop types.

Link: PASTIS Dataset

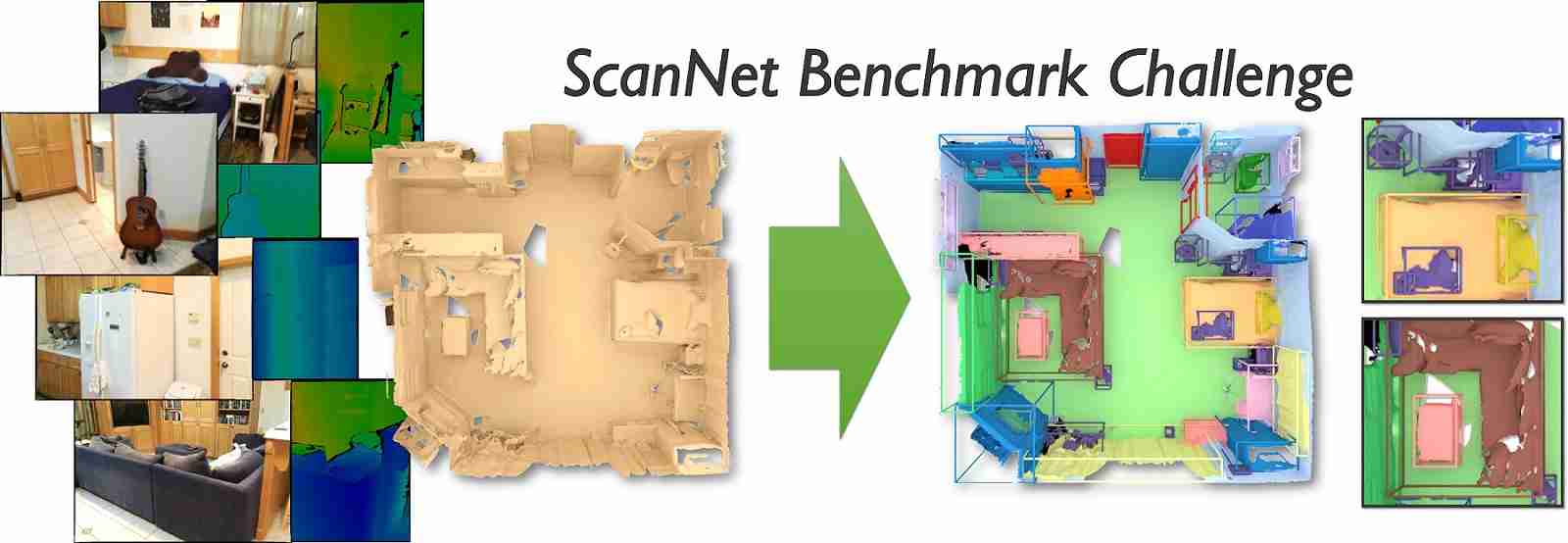

13. ScanNet v2

ScanNet is an RGB-D video dataset of indoor scenes containing 2.5 million views in 1,513 scans. It uses 38 thing categories for items and furniture in the rooms and 2 stuff categories (wall and floor). It is not a complete panoptic dataset, as the labels only cover about 90% of all surfaces.

Link: ScanNet Dataset

3. Specialized Commercial Panoptic Segmentation Datasets

Maadaa.ai provides high-quality datasets designed for instance segmentation, semantic segmentation, and panoptic segmentation. Here are two datasets worth exploring:

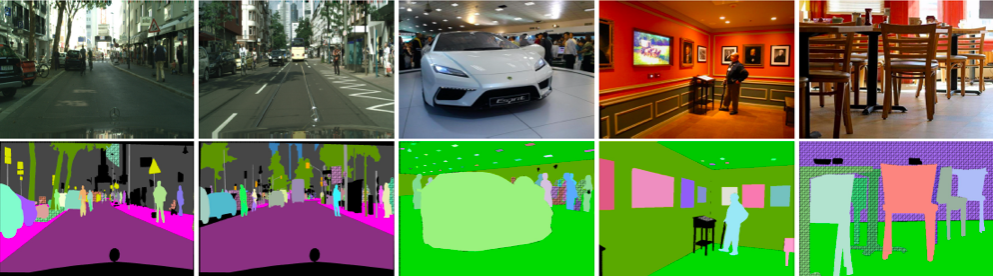

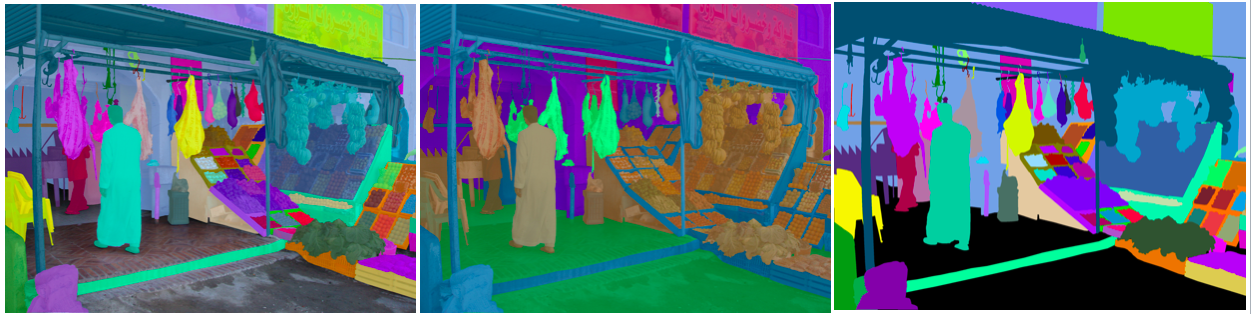

1. Panoptic Scenes Segmentation Dataset (MD-Image-039)

- Key Feature: The "Panoptic Scenes Segmentation Dataset" is a comprehensive resource for the robotics and visual entertainment fields, consisting of a wide range of internet-collected images with resolutions from 660 x 371 to 5472 x 3648 pixels. This dataset is aimed at semantic segmentation, capturing diverse elements such as horizontal and vertical planes, buildings, people, animals, and furniture, offering a holistic view of various scenes.

- Size: 21.3k images.

- Resolution: Varies from 660 x 371 to 5472 x 3648.

2. Human And Multi-object Panoptic Segmentation Dataset (MD-Image-070)

- Key Feature: The "Human And Multi-object Panoptic Segmentation Dataset" is curated for applications in visual entertainment, featuring a wide array of internet-collected images with resolutions exceeding 1280 x 700 pixels. This comprehensive dataset integrates both instance and semantic segmentation to label a diverse range of elements found in everyday life, including natural scenery, people, buildings, and animals, offering a panoptic view of various scenes and subjects.

- Size: 8k images.

4. Future Directions and Key Insights for the Industry

The landscape of panoptic segmentation is continuously evolving. Looking ahead, here are some key trends to watch:

- Increased Dataset Variety: Datasets will diversify even further, incorporating multimodal data like lidar, 3D depth data, and video, enabling more robust models.

- AI-powered Annotation Tools: Dataset providers will increasingly use AI-assisted annotation to speed up labeling and keep labels high-quality and consistent.

- Expanding Use Cases: Panoptic segmentation will play a growing role in areas like medical imaging (e.g., lung segmentation in X-rays), agriculture (e.g., crop recognition), and surveillance.