Explore the MultiScene360 Dataset – the premier multi-camera video resource for generative vision AI. Featuring 13 real-world scenes, 4 synchronized camera angles (1080p at 30 fps), and 20-30 GB of data, this dataset enables breakthroughs in 3D reconstruction, VR, and AI video generation. Developed by maadaa.ai to tackle spatial inconsistencies in AI models. Download now!

1. The Generative Vision Revolution: Why Multi-Camera Data Matters

The AI landscape is undergoing a spatial awakening. As generative technologies advance beyond 2D image creation, the industry faces a critical bottleneck: current datasets fail to capture the multidimensional nature of real-world spaces. Enter multi-camera systems, the missing link for teaching AI true spatial intelligence.

Recent findings from Stanford's Human-Centered AI Institute [1] reveal that models trained on single-view datasets exhibit 42% more spatial inconsistencies than those using synchronized multi-perspective data. This explains why leading tech firms are now scrambling for footage that captures:

- Natural occlusion patterns
- Lighting consistency across viewpoints
- Parallax effects during motion

MultiScene360 directly addresses these needs through professionally captured, real-world scenarios that go beyond sterile lab conditions.

[1] Stanford HAI, "The Spatial Understanding Gap in Generative AI," 2023.

2. Dataset Spotlight: What Makes MultiScene360 Unique

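Core Specifications at a Glance

- 13 real-world scenes
- 4 synchronized camera angles
- 1080p resolution at 30 fps
- 20-30 GB of total data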

Scene Intelligence Matrix

Indoor Dynamics

- Mirror Interactions (S009): Perfect for developing realistic reflective surfaces in digital environments
- Office Motions (S04): Ideal for telepresence applications with an upper-body focus

Outdoor Challenges

- Urban Walk (S012): Contains natural crowd occlusion, crucial for AR navigation systems
- Park Bench (S005): Demonstrates lighting transitions under foliage

Lighting Extremes

- Night Corridor (S013): Pushes the boundaries of low-light generation quality
- Window Silhouette (S011): A case study in mixed illumination

Precision Capture Framework

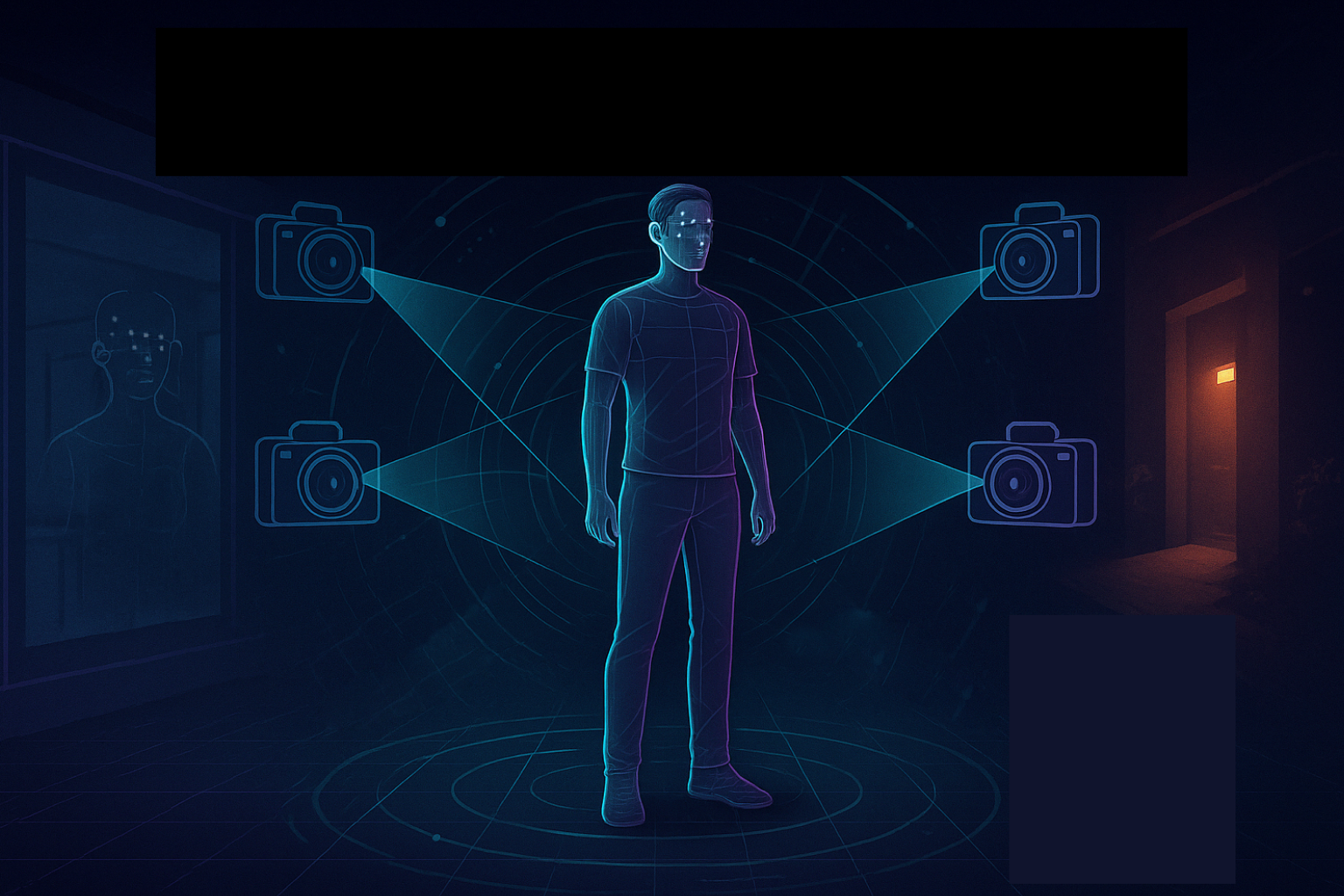

Our team used DJI Osmo Action 5 Pro cameras mounted on Manfrotto tripods in a radial configuration (see diagram below):

        [Subject]
        /   |   \
    Cam1   Cam2   Cam3
        \   |   /
          Cam4
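To make the radial layout concrete, here is a minimal Python sketch that places cameras on a circle around a subject at the origin and turns each one to face it. The helper `radial_rig` and the `CameraPose` type are hypothetical illustrations, not part of any maadaa.ai tooling. The defaults use the 1.5 m capture height from the technical parameters and 2.5 m, the midpoint of the stated 2-3 m subject distance. The article does not give exact camera angles, so the evenly spaced azimuths in the usage block are an assumption.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class CameraPose:
    """Hypothetical pose record: position in metres, subject at the origin."""
    name: str
    x: float
    y: float
    z: float        # height above the ground plane
    yaw_deg: float  # heading in the ground plane, counter-clockwise from +x


def radial_rig(azimuths_deg, distance_m=2.5, height_m=1.5):
    """Place one camera per azimuth on a circle of radius distance_m,
    each at height_m and yawed to look at the subject at the origin."""
    poses = []
    for i, az in enumerate(azimuths_deg, start=1):
        a = math.radians(az)
        x = distance_m * math.cos(a)
        y = distance_m * math.sin(a)
        # Looking back at the origin is the direction opposite the camera's
        # azimuth, so the heading is simply azimuth + 180 degrees.
        yaw = (az + 180.0) % 360.0
        poses.append(CameraPose(f"Cam{i}", x, y, height_m, yaw))
    return poses


if __name__ == "__main__":
    # Assumed even 90-degree spacing; the real rig angles are not specified.
    for pose in radial_rig([0, 90, 180, 270]):
        print(f"{pose.name}: ({pose.x:.2f}, {pose.y:.2f}, {pose.z:.2f}) m, "
              f"yaw {pose.yaw_deg:.0f} deg")
```

Computing the yaw directly from the azimuth, rather than from `atan2` of the rounded coordinates, keeps headings exact for whole-degree inputs.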

Technical parameters:

- 1.5 m capture height (average eye level)
- 2-3 m subject distance
- <5 ms synchronization variance
- 20-30% view overlap for robust 3D matching
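The synchronization figure is easiest to judge against the frame interval: at 30 fps a new frame arrives every 1000 / 30 ≈ 33.3 ms, so a 5 ms offset is about 15% of one frame. The Python sketch below pairs frames from two cameras by nearest timestamp and keeps only pairs within that 5 ms bound. It assumes per-frame timestamps in milliseconds are available for each camera; the article does not say how timestamps are stored, and `match_frames` and `nearest_index` are hypothetical helpers.

```python
from bisect import bisect_left

FPS = 30.0
FRAME_INTERVAL_MS = 1000.0 / FPS  # about 33.3 ms between frames at 30 fps
SYNC_TOLERANCE_MS = 5.0           # the stated synchronization variance bound


def nearest_index(sorted_ts, t):
    """Index of the timestamp in a non-empty, sorted list closest to t."""
    i = bisect_left(sorted_ts, t)
    if i == 0:
        return 0
    if i == len(sorted_ts):
        return len(sorted_ts) - 1
    before, after = sorted_ts[i - 1], sorted_ts[i]
    return i if after - t < t - before else i - 1


def match_frames(reference_ts, other_ts, tolerance_ms=SYNC_TOLERANCE_MS):
    """Pair each reference frame with the closest frame from another camera,
    keeping only (ref_index, other_index) pairs within tolerance_ms."""
    if not other_ts:
        return []
    pairs = []
    for i, t in enumerate(reference_ts):
        j = nearest_index(other_ts, t)
        if abs(other_ts[j] - t) <= tolerance_ms:
            pairs.append((i, j))
    return pairs


if __name__ == "__main__":
    cam1 = [i * FRAME_INTERVAL_MS for i in range(5)]
    cam2 = [t + 3.0 for t in cam1]  # 3 ms offset: within tolerance
    cam3 = [t + 8.0 for t in cam1]  # 8 ms offset: outside tolerance
    print(match_frames(cam1, cam2))  # [(0, 0), (1, 1), (2, 2), (3, 3), (4, 4)]
    print(match_frames(cam1, cam3))  # []
```

Binary search via `bisect_left` keeps each lookup logarithmic in the number of frames, which matters for long 30 fps clips.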

3. Transformative Applications

Entertainment Tech

- Virtual Production: Alternatives to Disney’s StageCraft for indie studios

- AI Storyboarding: Automatically generate shot sequences using perspective-aware models

Digital Human Interfaces

- VR Telepresence: Lifelike avatar movements from limited camera arrays

- Metaverse Commerce: Clothing visualization with natural drape physics

Urban Innovation

- Architectural Preview: Generate walkthroughs from limited site visits

- Public Safety Sims: Train security systems with realistic crowd dynamics

“Our tests show multi-view data reduces artifact generation by 37% in viewpoint extrapolation tasks,” reports Epic Games’ Reality Lab in their 2024 Immersive Tech Review.

4. Getting Started with MultiScene360

Instant Access in 3 Steps:

- Navigate to https://maadaa.ai/multiscene360-Dataset
- Complete our one-time registration (no payment required)
- Receive your download link.
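Once the archive is downloaded, a small index that groups clips by scene and camera makes it easy to confirm that every scene has all four synchronized angles. The article does not document the archive's folder structure or file names, so the `S009_cam2.mp4` naming pattern below is purely a hypothetical assumption, as are the helper names `index_clips` and `incomplete_scenes`; adapt the regular expression to the actual layout.

```python
import re
from collections import defaultdict
from pathlib import Path

# Hypothetical file naming: <scene id>_cam<n>.mp4, e.g. "S009_cam2.mp4".
# The real archive layout may differ; adjust this pattern to match it.
CLIP_PATTERN = re.compile(r"^(S\d+)_cam(\d+)\.mp4$")


def index_clips(root):
    """Map each scene ID to a {camera number: path} dict for files under root."""
    scenes = defaultdict(dict)
    for path in sorted(Path(root).rglob("*")):
        match = CLIP_PATTERN.match(path.name)
        if not path.is_file() or match is None:
            continue  # ignore folders and files outside the assumed pattern
        scenes[match.group(1)][int(match.group(2))] = path
    return dict(scenes)


def incomplete_scenes(scenes, expected_cameras=4):
    """Return IDs of scenes missing any of cameras 1..expected_cameras."""
    expected = set(range(1, expected_cameras + 1))
    return sorted(sid for sid, cams in scenes.items() if not expected <= set(cams))
```

For example, `incomplete_scenes(index_clips("MultiScene360"))` (folder name assumed) would list any scene whose four angles did not all download.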

For commercial licensing or custom multi-camera data collection projects: contact@maadaa.ai | maadaa.ai

5. About maadaa.ai

Founded in 2015, maadaa.ai is a pioneering AI data service provider specializing in multimodal data solutions for generative AI development. We deliver end-to-end data services covering text, voice, image, and video data types – the core fuel for training and refining generative models.

Our Generative AI Data Solution includes:

- High-quality dataset collection & annotation tailored for LLMs and diffusion models
- Scenario-based human feedback (RLHF/RLAIF) to enhance model alignment
- One-stop data management through our MaidX platform for streamlined model training

6. Inspiration & Academic Foundations

The MultiScene360 Dataset builds upon groundbreaking research from RECAMM (Research Center for Advanced Media Measurement), particularly their seminal paper "Multi-Perspective Learning in Vision AI Systems" (2024) [2]. Our team at maadaa.ai was inspired by RECAMM's findings that:

"Multi-camera systems capturing real-world parallax effects yield 28% better spatial coherence in generated 3D environments compared to synthetic data."

We've operationalized these insights by:

- Implementing RECAMM's recommended camera overlap (20-30%)
- Scaling their lab-based methodology to real-world settings

[2] RECAMM, "Multi-Perspective Learning in Vision AI Systems," 2024. Full study available at: https://recammaster.org/