If you missed the first article in this series, please read:

Towards BEV+Transformer based Autonomous Driving — Case Study on Chinese Robotaxi (Part 1)

3. The Technologies Behind Each Robotaxi Brand

Complex road conditions and dense vehicle and pedestrian traffic in China's major cities make operating a Robotaxi there more challenging than abroad.

Apollo Go is Baidu's autonomous ride-hailing service, powered by the Apollo platform. Its current fifth-generation Robotaxi is equipped with one main LiDAR and eight millimeter-wave radars, and offers a tenfold increase in performance over the fourth generation. This is the model Baidu currently uses for its fully driverless Robotaxi service.

Baidu Apollo's perception algorithm, for example, includes pre-processing and post-processing modules. First, the radar and cameras capture sensor data; after light pre-processing, traffic lights, vehicles and other objects in the images are detected and then post-processed. Finally, the AI system builds an understanding of the obstacles in the current road scene.
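The three-stage structure described above can be sketched as follows. This is not Apollo's implementation: the `Detection` type, the pixel-scaling pre-processing step, and the detector passed into `perceive` are all hypothetical stand-ins. The post-processing stage uses confidence filtering plus per-class non-maximum suppression (NMS), a common choice for cleaning up raw detector output.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Detection:
    label: str                                # e.g. "car", "traffic_light"
    score: float                              # detector confidence in [0, 1]
    box: tuple[float, float, float, float]    # (x1, y1, x2, y2) in pixels


def preprocess(image: list[list[int]]) -> list[list[float]]:
    """Pre-processing stand-in: scale 8-bit pixel values to [0, 1]."""
    return [[px / 255.0 for px in row] for row in image]


def iou(a, b) -> float:
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def postprocess(dets, score_thresh=0.5, iou_thresh=0.5):
    """Post-processing: drop low-confidence boxes, then apply per-class NMS."""
    kept: list[Detection] = []
    for d in sorted((d for d in dets if d.score >= score_thresh),
                    key=lambda d: d.score, reverse=True):
        # Keep a box only if it does not heavily overlap a higher-scoring box of the same class.
        if all(k.label != d.label or iou(k.box, d.box) < iou_thresh for k in kept):
            kept.append(d)
    return kept


def perceive(image, detector: Callable[[list[list[float]]], list[Detection]]):
    """Pre-process -> detect -> post-process, mirroring the stages in the text."""
    return postprocess(detector(preprocess(image)))
```

With a detector that returns two heavily overlapping "car" boxes and one low-confidence pedestrian, `perceive` keeps only the higher-scoring car.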

Baidu has also proposed the concept of “vehicle-road collaboration”, which relies on roadside sensors. These sensors collect information and help vehicles avoid risks, reducing reliance on onboard radar and lowering costs.
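The article does not describe how Baidu fuses roadside data, so the following is only a hypothetical sketch of the basic idea: roadside detections reported in a shared map (world) frame are rotated and translated into the vehicle's own frame using its pose, then merged with onboard detections, skipping anything the vehicle already sees. The function names, the 2D simplification and the `dedup_radius` parameter are assumptions for illustration.

```python
import math


def world_to_ego(point, ego_pose):
    """Transform a 2D world-frame point into the ego vehicle frame.

    ego_pose = (x, y, yaw): vehicle position in the world frame, yaw in radians.
    In the ego frame, +x points along the heading and +y points to the left.
    """
    ex, ey, yaw = ego_pose
    dx, dy = point[0] - ex, point[1] - ey
    c, s = math.cos(yaw), math.sin(yaw)
    # Rotate by -yaw so the x-axis lines up with the vehicle's heading.
    return (c * dx + s * dy, -s * dx + c * dy)


def merge_detections(onboard, roadside_world, ego_pose, dedup_radius=1.5):
    """Merge onboard (label, (x, y)) detections with roadside ones given in world coordinates."""
    merged = list(onboard)
    for label, pt in roadside_world:
        local = world_to_ego(pt, ego_pose)
        # Treat a roadside detection as a duplicate if the vehicle already sees
        # an object of the same class within dedup_radius metres of it.
        duplicate = any(l == label and math.dist(p, local) <= dedup_radius
                        for l, p in merged)
        if not duplicate:
            merged.append((label, local))
    return merged
```

Here a roadside sensor can contribute, for instance, a pedestrian hidden from the vehicle's own sensors, which is the kind of risk avoidance the collaboration concept aims at.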

Last year, Pony.ai launched its sixth-generation Robotaxi system, S-AM, which for the first time adopted solid-state LiDAR in a large-scale assembly program. One and three solid-state LiDARs are integrated at the front and rear, respectively, supplemented by several blind-spot LiDARs, long-range millimeter-wave radars and millimeter-wave radars.

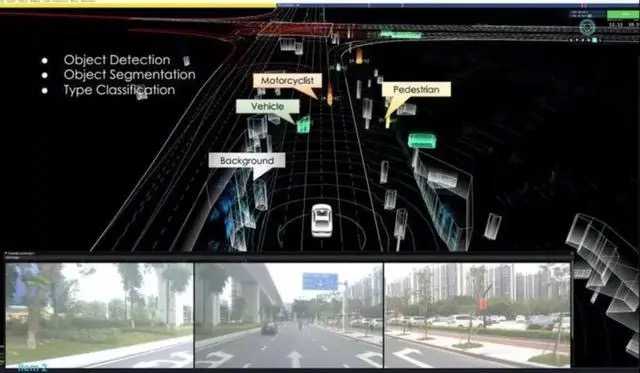

According to official information, Pony.ai perceives road conditions through cameras, radar and other sensors, and performs target detection, multi-target tracking, scene understanding and other tasks.

As shown in the image above, the perception system marks vehicles in green, motorcycles in orange, pedestrians in yellow, and environmental information in gray.
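Pony.ai's actual tracker is not described here, so the following is a hypothetical sketch of the simplest form of multi-target tracking: each new detection is greedily matched to the nearest same-class object from the previous frame, and unmatched detections start new tracks. It also assigns the display colors described above. Production trackers typically add motion models (for example Kalman filters) and globally optimal assignment (for example the Hungarian algorithm); the class names and `max_dist` value are assumptions.

```python
import math
from itertools import count

# Display colors as described above; anything else (environment) is drawn gray.
CLASS_COLORS = {"vehicle": "green", "motorcycle": "orange", "pedestrian": "yellow"}
DEFAULT_COLOR = "gray"


class GreedyTracker:
    """Nearest-neighbour multi-target tracker over 2D object positions."""

    def __init__(self, max_dist=2.0):
        self.max_dist = max_dist     # metres an object may move between frames
        self.tracks = {}             # track_id -> (label, (x, y)) from the last frame
        self._next_id = count()

    def update(self, detections):
        """detections: list of (label, (x, y)); returns [(track_id, label, color)]."""
        unmatched = dict(self.tracks)
        current, results = {}, []
        for label, pos in detections:
            # Closest same-class track from the previous frame, if any.
            candidates = [(math.dist(p, pos), tid)
                          for tid, (l, p) in unmatched.items() if l == label]
            best = min(candidates, default=None)
            if best is not None and best[0] <= self.max_dist:
                tid = best[1]
                del unmatched[tid]           # each old track matches at most once
            else:
                tid = next(self._next_id)    # start a new track
            current[tid] = (label, pos)
            results.append((tid, label, CLASS_COLORS.get(label, DEFAULT_COLOR)))
        self.tracks = current                # tracks not seen this frame are dropped
        return results
```

Because IDs persist across frames, the same vehicle keeps the same ID (and color) as it moves, which is what lets a perception view draw stable, color-coded objects.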

DDT's Robotaxi, built on the Volvo XC90 in collaboration with Volvo, carries about 50 sensors throughout its body, including seven LiDARs, six millimeter-wave radars, 23 cameras, an infrared camera, 12 ultrasonic radars, and a high-precision integrated navigation system.
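The listed components add up to the "about 50" figure if the navigation system is counted as a single unit, an assumption made in the tally below:

```python
# Sensor counts for DDT's Volvo XC90-based Robotaxi, as listed above.
sensors = {
    "lidar": 7,
    "millimeter_wave_radar": 6,
    "camera": 23,
    "infrared_camera": 1,
    "ultrasonic_radar": 12,
    "integrated_navigation_system": 1,   # counted as one unit (assumption)
}

total = sum(sensors.values())
print(f"total sensors: {total}")   # total sensors: 50
```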

4. Crucial Factors for Robotaxis

Most Robotaxi self-driving stacks try to approximate human reasoning by building perception, prediction, planning and control modules. Of these, perception is the most critical.

The perception module has to detect objects, identify what they are, track them, perform semantic segmentation, and determine whether what lies ahead of the vehicle is another car, a pedestrian, or an obstacle.
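To make the module chain concrete, here is a deliberately tiny, hypothetical sketch of one longitudinal control cycle. It assumes perception has already produced classified objects with a distance and relative velocity; prediction is constant-velocity extrapolation, planning picks a target speed, and control is a proportional speed controller. All names, thresholds and gains are illustrative assumptions, not any Robotaxi company's design.

```python
from dataclasses import dataclass


@dataclass
class TrackedObject:
    """Output of the (assumed) perception module for one object."""
    kind: str      # "car", "pedestrian" or "obstacle"
    x: float       # distance ahead of the ego vehicle along its lane, metres
    v_rel: float   # velocity relative to the ego vehicle, m/s (negative = closing)


def predict_gap(obj: TrackedObject, horizon_s: float) -> float:
    """Prediction: constant-velocity extrapolation of the gap to the object."""
    return obj.x + obj.v_rel * horizon_s


def plan(objects, ego_speed, horizon_s=2.0, cruise_speed=15.0, min_gap=10.0):
    """Planning: choose a target speed from the nearest object ahead."""
    ahead = [o for o in objects if o.x > 0]
    if not ahead:
        return cruise_speed
    lead = min(ahead, key=lambda o: o.x)
    if min(lead.x, predict_gap(lead, horizon_s)) >= min_gap:
        return cruise_speed
    if lead.kind == "car":
        return max(0.0, ego_speed + lead.v_rel)   # follow the lead car at its speed
    return 0.0                                    # pedestrian or obstacle too close: stop


def control(target_speed, ego_speed, gain=0.5, max_accel=3.0):
    """Control: proportional speed controller with an acceleration limit (m/s^2)."""
    return max(-max_accel, min(max_accel, gain * (target_speed - ego_speed)))


def step(objects, ego_speed):
    """One cycle of the perception -> prediction -> planning -> control chain."""
    return control(plan(objects, ego_speed), ego_speed)
```

The sketch also shows why perception matters most: if a pedestrian is misclassified as a car, `plan` follows it instead of stopping.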

4.1 BEV+Transformer

BEV+Transformer has become one of the mainstream perception architectures. Autonomous driving solutions that emphasize strong perception and rely only on lightweight maps are opening a new chapter for the industry.

Combining the two makes full use of the spatial information about the environment provided by BEV (bird's-eye view) representations and the Transformer's ability to model heterogeneous data from multiple sources, enabling more accurate environmental perception, longer-horizon motion planning, and more comprehensive decision-making.
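The core mechanism can be illustrated with a minimal, dependency-free sketch: a grid of BEV queries, one per bird's-eye-view cell, uses scaled dot-product cross-attention to pull information from feature tokens pooled across all cameras. This is a simplification under stated assumptions: real BEV models (BEVFormer, for example) use learned query/key/value projections and camera geometry to restrict each query to relevant image regions, both omitted here, and the random queries stand in for learned ones.

```python
import math
import random


def softmax(xs):
    m = max(xs)                              # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product attention: each query takes a weighted mix of the values."""
    d = len(queries[0])
    out = []
    for q in queries:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out.append([sum(w * v[c] for w, v in zip(weights, values))
                    for c in range(len(values[0]))])
    return out


def build_bev(camera_features, bev_h, bev_w, dim, seed=0):
    """Fuse per-camera feature tokens into a bev_h x bev_w grid of dim-sized vectors.

    camera_features: dict mapping a camera name to a list of dim-sized feature vectors.
    """
    rng = random.Random(seed)
    # One query per BEV grid cell; learned in a real model, random here.
    queries = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(bev_h * bev_w)]
    # Pool tokens from every camera so each BEV cell can attend across all views.
    tokens = [t for feats in camera_features.values() for t in feats]
    cells = cross_attention(queries, tokens, tokens)
    return [cells[r * bev_w:(r + 1) * bev_w] for r in range(bev_h)]
```

Pooling tokens from several sensors into one key/value set is what lets attention fuse heterogeneous sources; other modalities (radar or LiDAR features projected to the same dimension) could be appended to `tokens` the same way.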

To learn more about how BEV+Transformer is revolutionizing the autonomous driving industry, please read: BEV+Transformer: Next Generation of Autonomous Vehicles and Data Challenges?

For Robotaxis, however, success takes more than BEV+Transformer alone.

4.2 Corner Cases

In April this year, five Waymo Robotaxis stalled on the streets of downtown San Francisco in dense fog, seriously disrupting traffic.

Online posts have also documented a Waymo robotaxi encountering a traffic officer who signaled it to stop. The robotaxi did not respond immediately, and the passenger was unable to make it obey the officer either.

China's robotaxis have also had minor problems. For example, a passenger described a ride on zhihu.com: as an Apollo Go robotaxi passed through an intersection, it misidentified three roadside food stalls and several pedestrians as large trucks.

These examples show that what really determines the accuracy of autonomous driving is rich scenario data, especially data covering corner cases.

Corner cases are rare road conditions, such as very busy intersections during the evening rush hour; intersections with large numbers of pedestrians or non-motorized vehicles; intersections where a narrow road meets wide lanes with traffic crossing from the left and right; road surfaces in heavy rain, snow and fog; and special cases such as vehicles on sidewalks, wrong-way driving, rollovers, and partially disintegrated vehicle bodies.

Whoever can collect more data on these long-tail scenarios will be better able to optimize their algorithms and improve the accuracy of autonomous driving.
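One simple way to find long-tail scenarios in a data collection is to count how often each combination of scenario tags occurs and flag the rare ones. The sketch below assumes each recorded clip already carries scenario tags (a hypothetical schema) and uses a frequency threshold, `max_share`, chosen purely for illustration.

```python
from collections import Counter


def find_long_tail(clips, max_share=0.05):
    """Return scenario signatures that make up at most max_share of all clips.

    clips: list of dicts, each with a "tags" list such as ["intersection", "fog"].
    A signature is the unordered set of tags on one clip.
    """
    signatures = [frozenset(c["tags"]) for c in clips]
    counts = Counter(signatures)
    total = len(signatures)
    return {sig: n for sig, n in counts.items() if n / total <= max_share}
```

For example, in 20 clips where 19 are tagged urban/clear and one is urban/fog, only the fog scenario is flagged as long-tail, telling the team where more collection is needed.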

To address this, maadaa.ai has released the report “maadaa.ai White Paper | Corner Cases in Auto-driving — The Data Challenges”. maadaa.ai's global network helps address these data challenges for safer autonomous driving.

4.3 Fast and Efficient Labeling of Large Amounts of Autonomous Driving Data

With driverless taxis carrying so many “eyes” (radars, cameras and other sensors) and encountering so many kinds of corner cases, a huge amount of data must be labeled and fed to the AI quickly and efficiently.

To help the autonomous driving industry solve this problem, maadaa.ai launched the MaidX Auto-4D platform after years of R&D and testing. It is a “human-in-the-loop” auto-annotation platform that supports BEV multi-sensor fusion data.

Drawing on years of experience in autonomous driving, maadaa.ai has found that the platform can reduce the cost of manual labeling by 90% or more.

For example, with purely manual production, labeling one hour of data requires 3,000 to 5,000 man-hours. With MaidX Auto-4D, this drops to 200 to 500 man-hours.
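The figures above can be checked with a few lines of arithmetic. The text does not say which ends of the two ranges correspond, so pairing them endpoint to endpoint (low with low, high with high) is an assumption; under it, the reduction is 90% to about 93%. Comparing the extremes instead would give roughly 83% (3,000 vs 500) to 96% (5,000 vs 200).

```python
# Man-hours needed to label one hour of data, as quoted above.
MANUAL = (3000, 5000)     # purely manual production
ASSISTED = (200, 500)     # with MaidX Auto-4D


def reduction(before: float, after: float) -> float:
    """Fractional reduction in man-hours, e.g. 0.9 means 90% fewer."""
    return 1 - after / before


# Pair the ranges endpoint to endpoint (an assumption).
for before, after in zip(MANUAL, ASSISTED):
    print(f"{before} -> {after} man-hours: {reduction(before, after):.1%} reduction")
# 3000 -> 200 man-hours: 93.3% reduction
# 5000 -> 500 man-hours: 90.0% reduction
```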

The “Human-in-the-Loop” data labeling process consists of Data Quality Check, Data Cleansing, Automatic Labeling (95%), Manual Inspection and Correction, and Ground Truth.
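The five stages can be sketched as a simple pipeline. This is a hypothetical illustration, not MaidX Auto-4D's implementation: the `Frame` type, the validity flag, timestamp-based deduplication, and the confidence threshold that decides which auto-labels go to a human reviewer are all assumptions. The `auto_label` and `review` callables stand in for a labeling model and a human annotator.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    frame_id: str
    timestamp: float          # seconds; used to detect duplicate frames
    valid: bool = True        # False if, e.g., a sensor dropped out or streams are out of sync


def quality_check(frames):
    """Step 1: discard frames that fail basic data-quality checks."""
    return [f for f in frames if f.valid]


def cleanse(frames):
    """Step 2: remove duplicate frames, keeping the first one per timestamp."""
    seen, kept = set(), []
    for f in frames:
        if f.timestamp not in seen:
            seen.add(f.timestamp)
            kept.append(f)
    return kept


def run_pipeline(frames, auto_label, review, conf_thresh=0.9):
    """Steps 3-5: auto-label, send low-confidence frames to a human, emit ground truth.

    auto_label(frame) -> (label, confidence); review(frame, proposed_label) -> label.
    Returns (ground_truth dict, number of frames a human reviewed).
    """
    ground_truth, reviewed = {}, 0
    for f in cleanse(quality_check(frames)):
        label, confidence = auto_label(f)
        if confidence < conf_thresh:
            label = review(f, label)     # manual inspection and correction
            reviewed += 1
        ground_truth[f.frame_id] = label
    return ground_truth, reviewed
```

The cost saving in a human-in-the-loop design comes from `reviewed` being much smaller than the number of frames: people only correct what the model is unsure about.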

The benefits of the MaidX Auto-4D platform are:

1. Auto-Annotation Engine supporting BEV Multi-Sensor Fusion Data.

2. Supports data annotation for multi-sensor fusion under BEV.

3. Comprehensive target object attributes (ID, dimensions, position, classification, velocity, acceleration, occlusion relationship, visibility, trajectory, lane assignment, etc.).

4. Forward/backward tracking uses historical and future frames to improve detection accuracy in the current frame.

5. Deep learning models supporting multi-tasking (Segmentation, Object Detection, etc.)

6. Reduces manual labeling costs by 90% or more.
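Items 3 and 4 can be illustrated together. The sketch below defines a hypothetical per-frame record for the listed object attributes and two simple ways of using past and future frames to improve the current one: linear interpolation to recover frames where detection failed, and a centered moving average to reduce position jitter. The field names, units and techniques are assumptions for illustration; the platform's actual forward/backward tracking method is not described here.

```python
from __future__ import annotations

from dataclasses import dataclass, replace


@dataclass
class ObjectAnnotation:
    """Hypothetical per-frame record for the object attributes listed in item 3."""
    track_id: int
    frame: int
    category: str
    position: tuple[float, float, float]            # x, y, z centre, metres
    dimensions: tuple[float, float, float]          # length, width, height, metres
    velocity: tuple[float, float] = (0.0, 0.0)      # m/s
    acceleration: tuple[float, float] = (0.0, 0.0)  # m/s^2
    visibility: float = 1.0                         # visible fraction of the object
    occluded_by: tuple[int, ...] = ()               # track IDs of occluding objects
    lane_id: str | None = None


def fill_gaps(track: list[ObjectAnnotation]) -> list[ObjectAnnotation]:
    """Recover frames where detection failed by interpolating between the
    previous (historical) and next (future) detections of the same track."""
    if not track:
        return []
    track = sorted(track, key=lambda a: a.frame)
    filled = [track[0]]
    for prev, nxt in zip(track, track[1:]):
        gap = nxt.frame - prev.frame
        for k in range(1, gap):
            t = k / gap
            pos = tuple(p + t * (n - p) for p, n in zip(prev.position, nxt.position))
            # Other attributes are copied from the earlier frame.
            filled.append(replace(prev, frame=prev.frame + k, position=pos))
        filled.append(nxt)
    return filled


def smooth_positions(track: list[ObjectAnnotation], window: int = 1) -> list[ObjectAnnotation]:
    """Reduce per-frame jitter with a centred moving average over past and future frames."""
    track = sorted(track, key=lambda a: a.frame)
    out = []
    for i, ann in enumerate(track):
        nbrs = track[max(0, i - window): i + window + 1]
        avg = tuple(sum(a.position[d] for a in nbrs) / len(nbrs) for d in range(3))
        out.append(replace(ann, position=avg))
    return out
```

Both functions only make sense offline, on recorded data, since they look at future frames; that is exactly the advantage an annotation platform has over an onboard, real-time tracker.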

The MaidX Auto-4D platform is a one-stop solution for efficient, high-quality annotation of massive and diverse data. While maintaining quality, it shortens annotation time, reduces annotation cost, and improves labeling efficiency by 3 to 4 times.

To find out more, please read: BEV+Transformer: Next Generation of Autonomous Vehicles and Data Challenges?

5. Conclusion

In conclusion, the autonomous driving industry, particularly Robotaxis in China, is advancing rapidly, with companies such as Baidu, Pony.ai and DDT at the forefront. The importance of perception is evident, especially the combination of BEV+Transformer architectures with corner-case data.

maadaa.ai’s MaidX Auto-4D platform provides a solution for efficiently labeling large amounts of autonomous driving data, significantly reducing the cost of manual labeling.

This case study series provides valuable insights into the current state and future potential of the BEV+Transformer based autonomous driving industry.

Further Reading:

- Towards BEV+Transformer Based Autonomous Driving — Case Study On Baidu-Geely Collaboration (Part 1)

- Towards BEV+Transformer Based Autonomous Driving — Case Study On Baidu-Geely Collaboration (Part 2)

- Towards BEV+Transformer based Autonomous Driving – Case Study on Baidu-Geely Collaboration | Video

- BEV+Transformer: Next Generation of Autonomous Vehicles and Data Challenges?