This section introduces recent video-language pre-training methods. These methods typically use a transformer as a feature extractor to learn from large-scale multi-modal data. Current methods can be roughly divided into two categories: single-stream methods and two-stream methods.

Single-stream methods process video and language inputs in a single transformer, which captures diverse dependencies between the modalities. Two-stream methods encode video and language separately, then obtain the final cross-modal interaction through another transformer or a simple dot product. Generally, single-stream methods capture fine-grained relationships between text and video and perform better than two-stream methods. However, two-stream methods are more flexible than single-stream methods because they extract each modality's features separately.
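
The difference between the two designs can be sketched in a few lines of Python. This is only an illustration: the function names, the `[CLS]`/`[SEP]` markers, and the toy vectors are assumptions made for the example, and the encoders themselves are omitted.

```python
def two_stream_score(video_vec, text_vec):
    """Two-stream: each modality is encoded on its own, then compared.

    Here the comparison is the simple dot product of two pooled embeddings.
    """
    return sum(v * t for v, t in zip(video_vec, text_vec))


def single_stream_input(video_tokens, text_tokens, sep="[SEP]"):
    """Single-stream: both modalities are joined into ONE token sequence.

    A single transformer would then attend across text and video tokens.
    """
    return ["[CLS]"] + list(text_tokens) + [sep] + list(video_tokens) + [sep]


score = two_stream_score([1.0, 2.0], [3.0, 4.0])
sequence = single_stream_input(["v1", "v2"], ["slice", "onion"])
```

Because the two-stream score only needs pooled embeddings, video embeddings can be computed once and reused across many text queries, which is one way to read the flexibility argument above.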

Single-Stream Methods

VideoBERT

VideoBERT [12] is the first work to explore video-language representation learning with a transformer-based pre-training method. It follows the single-stream structure, porting the original BERT architecture to the multi-modal domain, as illustrated in Fig. 17. Specifically, it feeds the combination of video tokens and linguistic sentences into multi-layer transformers and trains the model to learn the correlation between video and text by predicting masked tokens. VideoBERT shows that a simple transformer structure can learn high-level video representations that capture semantic meaning and long-range temporal dependencies.

Fig. 17: VideoBERT

VideoBERT splits each video into short segments of fixed duration, extracts a feature for each segment, and clusters these features to construct a video dictionary, which discretizes continuous video into discrete tokens analogous to words. During pre-training, the model is trained with masked language modeling (MLM), masked frame modeling (MFM), and video-language matching (VLM) proxy tasks, which correspond to feature learning in the text-only, video-only, and video-text domains. Despite the simple proxy tasks and model structure, VideoBERT performs strongly on the downstream tasks of zero-shot action classification and video captioning. The model is initialized from pre-trained BERT weights, and the video tokens are derived from features of an S3D backbone. All experiments are conducted in the cooking domain: the authors pre-train on a large set of cooking videos scraped from YouTube and evaluate on the YouCook II benchmark.
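
The discretization step can be sketched as nearest-centroid assignment. The sketch below assumes the centroids have already been produced by some clustering procedure; the 2-D features, the three centroids, and the function names are toy values chosen for illustration, not the configuration used in VideoBERT.

```python
def nearest_centroid(feature, centroids):
    """Return the index of the centroid closest to `feature` (squared Euclidean)."""
    best_idx, best_dist = 0, float("inf")
    for idx, centroid in enumerate(centroids):
        dist = sum((f - c) ** 2 for f, c in zip(feature, centroid))
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx


def tokenize_video(segment_features, centroids):
    """Map each segment feature to a discrete 'video word' id."""
    return [nearest_centroid(f, centroids) for f in segment_features]


# Hypothetical dictionary of three video words and four segment features.
centroids = [[0.0, 0.0], [1.0, 1.0], [5.0, 5.0]]
segment_features = [[0.1, -0.2], [0.9, 1.2], [4.8, 5.1], [0.0, 0.3]]
video_tokens = tokenize_video(segment_features, centroids)  # [0, 1, 2, 0]
```

Once segments are mapped to ids like these, they can be placed in the same sequence as word tokens and masked in the same way.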

HERO

Similarly, HERO [13] is a single-stream video-language pre-training framework. HERO encodes multimodal inputs in a hierarchical structure: a Cross-modal Transformer captures the local context of each video frame via multimodal fusion, and a Temporal Transformer captures the global video context. The model architecture is illustrated in Fig. 18. It takes the frames of a video clip and the textual tokens of the subtitle sentences as inputs, which are fed into a Video Embedder and a Text Embedder to extract initial representations. HERO then computes contextualized video embeddings hierarchically. First, the Cross-modal Transformer captures the local textual context of each visual frame by computing contextualized multimodal embeddings between a subtitle sentence and its associated frames. The encoded frame embeddings of the whole video clip are then fed into the Temporal Transformer to learn the global video context and obtain the final contextualized video embeddings.

In addition to the standard Masked Language Modeling (MLM) and Masked Frame Modeling (MFM) objectives, HERO introduces two new pre-training tasks: (i) Video-Subtitle Matching (VSM), where the model predicts both global and local temporal alignment; and (ii) Frame Order Modeling (FOM), where the model predicts the correct order of shuffled video frames. HERO is jointly trained on HowTo100M and large-scale TV datasets to gain a deep understanding of complex social dynamics with multi-character interactions.
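
To make the FOM objective concrete, the following sketch builds one training example by shuffling a subset of frame positions and recording where each shuffled frame originally came from. The function name, the `shuffle_ratio` default, and the string frames are assumptions for illustration and are not taken from the HERO implementation.

```python
import random


def make_fom_example(frames, shuffle_ratio=0.5, seed=0):
    """Shuffle a subset of frame positions; return (shuffled_frames, targets).

    `targets` maps each shuffled position to the original index of the frame
    now sitting there, which is what an order-prediction head would learn.
    """
    rng = random.Random(seed)
    n = len(frames)
    if n < 2:
        return list(frames), {}
    k = min(n, max(2, int(n * shuffle_ratio)))
    picked = sorted(rng.sample(range(n), k))  # positions to shuffle
    permuted = picked[:]
    rng.shuffle(permuted)                      # new source for each position
    shuffled = list(frames)
    targets = {}
    for dst, src in zip(picked, permuted):
        shuffled[dst] = frames[src]
        targets[dst] = src
    return shuffled, targets


frames = ["f0", "f1", "f2", "f3", "f4", "f5"]
shuffled, targets = make_fom_example(frames, shuffle_ratio=0.5, seed=1)
```

Positions that were not selected keep their original frame, so the model sees a mostly ordered clip and must recover the order of the shuffled part.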

ClipBERT

ClipBERT [14] proposes a generic framework that enables affordable end-to-end learning for video-and-language tasks through sparse sampling: only a single or a few sparsely sampled short clips from a video are used at each training step, as shown in Fig. 19. This differs from previous methods, which rely on an offline video encoder to extract visual features. Because each step processes so few frames, ClipBERT can train the video encoder end to end.
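
A minimal sketch of sparse sampling, assuming a video is addressed by frame index and that each clip is a run of consecutive frames starting at a uniformly random position. The function name and the defaults for `num_clips` and `clip_len` are hypothetical.

```python
import random


def sample_sparse_clips(num_frames, num_clips=2, clip_len=4, seed=None):
    """Return `num_clips` lists of consecutive frame indices, one per short clip."""
    if clip_len > num_frames:
        raise ValueError("clip_len must not exceed num_frames")
    rng = random.Random(seed)
    clips = []
    for _ in range(num_clips):
        # Uniformly pick a start so the whole clip stays inside the video.
        start = rng.randint(0, num_frames - clip_len)
        clips.append(list(range(start, start + clip_len)))
    return clips


# Only 2 x 4 = 8 of 1000 frames are decoded and encoded at this training step.
clips = sample_sparse_clips(num_frames=1000, num_clips=2, clip_len=4, seed=0)
```

Since a fresh set of clips is drawn at every step, the model still sees different parts of each video over the course of training.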

DeCEMBERT

The Dense Captions and Entropy Minimization (DeCEMBERT) [15] method addresses the problem that automatically generated captions in pre-training datasets such as HowTo100M are noisy and occasionally misaligned with the video content. DeCEMBERT improves video-and-language pre-training in two ways. First, it incorporates automatically generated dense region captions from video frames as auxiliary text input, which provides informative visual cues for learning better video-language associations. Second, to address temporal misalignment, it adds an entropy minimization-based constrained attention loss that encourages the model to focus on the best-matched caption from a pool of candidate ASR captions.

The architecture of DeCEMBERT is shown in Fig. 20. It takes video representations, dense captions, and ASR captions as input to its transformer layers and learns the model parameters via video-text matching and masked language modeling. It is also regularized by the constrained attention loss to learn better alignment between the video clips and the ASR captions.
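
The intuition behind an entropy-based attention regularizer can be shown with a small sketch. It assumes the model produces one matching score per candidate ASR caption and penalizes the entropy of the resulting distribution; this is a simplified stand-in, and the exact form of DeCEMBERT's constrained attention loss may differ.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_entropy(scores):
    """Entropy of the attention distribution over candidate captions.

    Low entropy means the attention mass is concentrated on one caption.
    """
    probs = softmax(scores)
    return -sum(p * math.log(p) for p in probs if p > 0.0)


uniform = attention_entropy([0.0, 0.0, 0.0])   # equals log(3), the maximum
peaked = attention_entropy([10.0, 0.0, 0.0])   # close to zero
```

Minimizing this quantity pushes the model from the uniform case toward the peaked case, that is, toward committing to a single candidate caption.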

VATT

VATT [16] learns multimodal representations from unlabeled data using convolution-free Transformer architectures. Specifically, VATT takes raw signals as input and extracts multimodal representations rich enough to benefit a range of downstream tasks. VATT is trained from scratch using multimodal contrastive losses, and its performance is evaluated on downstream tasks such as video action recognition, audio event classification, image classification, and text-to-video retrieval. Furthermore, VATT investigates a modality-agnostic, single-backbone Transformer by sharing weights among the three modalities. As shown in Fig. 21, VATT linearly projects each modality into a feature vector and feeds it into a Transformer encoder. It defines a semantically hierarchical common space to account for the granularity of different modalities and employs Noise Contrastive Estimation (NCE) to train the model.

VIOLET

Previous methods either use an offline video feature extractor to obtain video features or sparsely sample frames from a video and feed them into a 2D CNN. In contrast, VIOLET [17] proposes a transformer framework that models the temporal dynamics of video end to end. It also proposes Masked Visual-token Modeling (MVM) for better video modeling.

The framework of VIOLET is shown in Fig. 23. Specifically, VIOLET uses a Video Swin Transformer (VT) to model video representations. VT performs spatial-temporal modeling via 3D shifted windows to compute the initial video representations for video-language alignment. A cross-modal transformer then fuses the text and video features. VIOLET's Masked Visual-token Modeling (MVM) performs masked visual modeling in a self-reconstruction scenario. MVM first uses a discrete variational autoencoder (dVAE) to quantize video inputs into discrete visual tokens that serve as the masked prediction targets. It then masks out some video patches by replacing their pixel values with zeros, and the model learns to predict the targets at the masked positions from the temporal context.
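
The masking step of MVM can be sketched as follows. The sketch assumes each patch is a flat list of pixel values and that the discrete token id of every patch has already been computed by a dVAE; `visual_tokens`, `mask_ratio`, and the function name are hypothetical and only serve the illustration.

```python
import random


def mask_patches(patches, visual_tokens, mask_ratio=0.15, seed=0):
    """Zero out a random subset of patches and return their token targets.

    Returns (masked_patches, targets), where `targets` maps each masked
    position to the discrete visual token the model should predict there.
    """
    rng = random.Random(seed)
    n = len(patches)
    num_masked = max(1, int(n * mask_ratio))
    masked_idx = sorted(rng.sample(range(n), num_masked))
    masked = [list(p) for p in patches]           # copy, leave input intact
    for i in masked_idx:
        masked[i] = [0.0] * len(patches[i])       # replace pixels with zeros
    targets = {i: visual_tokens[i] for i in masked_idx}
    return masked, targets


patches = [[float(i + 1)] * 4 for i in range(8)]  # 8 toy patches, 4 pixels each
visual_tokens = [10, 11, 12, 13, 14, 15, 16, 17]  # assumed dVAE token ids
masked, targets = mask_patches(patches, visual_tokens, mask_ratio=0.25)
```

The prediction target is a token id rather than raw pixels, so the task becomes a classification over the dVAE vocabulary at each masked position.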

ALPRO

Align-and-Prompt (ALPRO) [18] is a single-stream framework for video-language pre-training. It proposes a video-text contrastive (VTC) loss to facilitate multi-modal interaction and prompt entity modeling (PEM) to learn fine-grained visual-language alignment.

The framework of ALPRO is illustrated in Fig. 22. Previous single-stream methods capture cross-modal interactions and model cross-modal relations with a simple transformer encoder layer, ignoring the misalignment between video and text features. To address this, ALPRO presents a video-text contrastive (VTC) loss that aligns the features from the unimodal encoders before they are sent into the multimodal encoder. Then, to learn fine-grained visual-language alignment, ALPRO proposes prompt entity modeling (PEM), which improves the model's ability to capture local and regional information and strengthens the cross-modal alignment between video regions and textual entities. PEM contains a prompter module that generates soft pseudo-labels identifying the entities that appear in random video crops. The pre-training model is then asked to predict the entity categories in the video crop, with the pseudo-label as the target.
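
One plausible way to read the prompter is as a similarity-then-softmax step: the embedding of a video crop is compared with the embedding of a text prompt for each entity, and the normalized similarities become the soft pseudo-label. The sketch below follows that reading. The embeddings, the temperature, and the function names are assumptions, and the real prompter may be built differently.

```python
import math


def log_softmax(scores):
    """Numerically stable log-softmax over a list of scores."""
    m = max(scores)
    lse = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - lse for s in scores]


def soft_pseudo_labels(crop_emb, prompt_embs, temperature=0.1):
    """Soft label over entities from crop-to-prompt dot-product similarity."""
    sims = [sum(c * p for c, p in zip(crop_emb, emb)) / temperature
            for emb in prompt_embs]
    return [math.exp(lp) for lp in log_softmax(sims)]


def soft_cross_entropy(pred_logits, soft_targets):
    """Cross-entropy between the model's entity prediction and the soft label."""
    log_probs = log_softmax(pred_logits)
    return -sum(t * lp for t, lp in zip(soft_targets, log_probs))


# Toy embeddings for one crop and three hypothetical entity prompts.
labels = soft_pseudo_labels([1.0, 0.0], [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
loss = soft_cross_entropy([5.0, 0.0, 0.0], labels)
```

Using a soft distribution instead of a hard class keeps some probability on related entities, which is useful when the pseudo-label itself is uncertain.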

Two-Stream Methods

CBT

CBT [19] proposes noise contrastive estimation (NCE) as the training objective for video-language learning, which preserves fine-grained video information better than the vector quantization (VQ) and softmax loss used in VideoBERT. As illustrated in Fig. 21, the model consists of three components: a text transformer (BERT) to embed discrete text features, a visual transformer to embed continuous video features, and a cross-modal transformer to embed the mutual information between the two modalities. CBT extends the BERT structure to a multi-stream structure and validates the NCE loss for learning cross-modal representations.

In the pre-training stage, the two single-modal transformers learn video and text representations using contrastive learning. The final cross-modal transformer combines the two modal sequences, computes their similarity score, and uses the NCE loss to learn the association between paired video and text. Both the pre-trained visual features and the cross-modal features are evaluated on downstream tasks such as action recognition, action anticipation, video captioning, and action segmentation.
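
A contrastive objective of this kind can be illustrated with the widely used InfoNCE form, in which each video is scored against every text in the batch and the paired text is treated as the correct class. This sketch is not CBT's exact loss: it uses dot-product similarity on pooled embeddings, a single video-to-text direction, and an assumed `temperature` value.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def info_nce(video_embs, text_embs, temperature=0.1):
    """Mean InfoNCE loss; (video_embs[i], text_embs[i]) are the positive pairs."""
    losses = []
    for i, v in enumerate(video_embs):
        logits = [dot(v, t) / temperature for t in text_embs]
        m = max(logits)
        # log of the softmax denominator, computed stably
        log_denom = m + math.log(sum(math.exp(l - m) for l in logits))
        losses.append(log_denom - logits[i])  # -log softmax of the positive
    return sum(losses) / len(losses)


videos = [[1.0, 0.0], [0.0, 1.0]]
matched_texts = [[1.0, 0.0], [0.0, 1.0]]
swapped_texts = [[0.0, 1.0], [1.0, 0.0]]
low = info_nce(videos, matched_texts)    # pairs agree, loss near zero
high = info_nce(videos, swapped_texts)   # pairs disagree, loss is large
```

The other texts in the batch act as negatives, so the loss falls only when a video is closer to its own text than to the rest.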

UniVL

Unified Video and Language pre-training (UniVL) [20] is proposed for both multi-modal understanding and generation. It comprises four components: two single-modal encoders, a cross encoder, and a decoder, all with a Transformer backbone. Five objectives are designed to train these components: video-text joint, conditioned masked language model (CMLM), conditioned masked frame model (CMFM), video-text alignment, and language reconstruction.

The framework of UniVL is illustrated in Fig. 22, which shows the two single-modal encoders, the cross encoder, and the decoder. The model is flexible enough for many text and video downstream tasks. The figure also presents the potential downstream tasks, e.g., text-to-video retrieval, video captioning, action recognition, and multi-modal classification.

Frozen in Time

Frozen in Time (FiT) [21] aims to learn a joint multi-modal embedding to enable effective text-to-video retrieval. It proposes an end-to-end trainable model designed to take advantage of both large-scale image and video captioning datasets. The model is an adaptation and extension of the recent ViT and TimeSformer architectures and applies attention over both space and time. FiT also introduces a large-scale video-text pre-training dataset, WebVid-2M, which contains 2M video-text pairs collected from the internet.

The model architecture is illustrated in Fig. 23. It uses both image and video data to pre-train the video-language model. The dual-encoder model consists of a visual encoder for images and videos and a text encoder for captions. Unlike 2D or 3D CNNs, the space-time transformer encoder can be trained flexibly on images and videos with captions jointly, by treating an image as a single-frame video.

CLIP-ViP

Pre-trained image-text models such as CLIP have demonstrated the power of vision-language representations learned from large-scale web-collected image-text data. CLIP-ViP [22] proposes to further pre-train the CLIP model on video-language data to extend the visual-language alignment to the video level.

The framework of CLIP-ViP consists of a text encoder and a vision encoder. CLIP-ViP proposes a Video Proxy mechanism to learn frame aggregation and temporality when transferring to the video domain. To preserve the generality and extensibility of the ViT backbone, it aims to transfer the CLIP model to the video domain with minimal modification. Specifically, as shown in Fig. 24, CLIP-ViP aggregates video features by performing attention between video proxy tokens and the patch features of all frames, instead of pooling. It also studies joint cross-modal learning on multi-source data, learning rich video-language alignment from video-subtitle pairs and reducing the domain gap with downstream data through auxiliary frame-caption pairs. It applies several contrastive losses across these data sources to obtain the multi-modal features, e.g., video sequences and single frames versus subtitles and captions.
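
The proxy-based aggregation can be pictured as an attention mask over a sequence made of a few proxy tokens followed by the patch tokens of every frame. In the sketch below, proxy tokens may attend to all tokens, which matches the description above. The additional restriction that patch tokens attend only to the proxies and to patches of their own frame is an assumption of this sketch, as are the function name and the token layout.

```python
def proxy_attention_mask(num_proxies, num_frames, patches_per_frame):
    """Build a boolean mask where mask[q][k] is True if query q may attend to key k.

    Token layout (assumed): [proxy tokens..., frame 0 patches, frame 1 patches, ...].
    """
    total = num_proxies + num_frames * patches_per_frame

    def frame_of(idx):
        # Frame index of a patch token; only called for non-proxy tokens.
        return (idx - num_proxies) // patches_per_frame

    mask = []
    for q in range(total):
        row = []
        for k in range(total):
            if q < num_proxies or k < num_proxies:
                row.append(True)                      # proxies see and are seen by all
            else:
                row.append(frame_of(q) == frame_of(k))  # patches stay within a frame
        mask.append(row)
    return mask


# 1 proxy token, 2 frames, 2 patches per frame -> 5 x 5 mask.
mask = proxy_attention_mask(num_proxies=1, num_frames=2, patches_per_frame=2)
```

Under this layout the proxy tokens are the only path by which information moves between frames, so they end up summarizing the whole video without any change to the underlying ViT layers.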

Reference List:

[1] ImageNet: crowdsourcing, benchmarking & other cool things

[2] Bert: Pre-training of deep bidirectional transformers for language understanding

[3] Attention Is All You Need

[4] ActBERT: Learning Global-Local Video-Text Representations

[5] The Kinetics Human Action Video Dataset

[6] AVA: A Video Dataset of Spatio-temporally Localized Atomic Visual Actions

[7] Dense-Captioning Events in Videos

[8] Towards Automatic Learning of Procedures from Web Instructional Videos

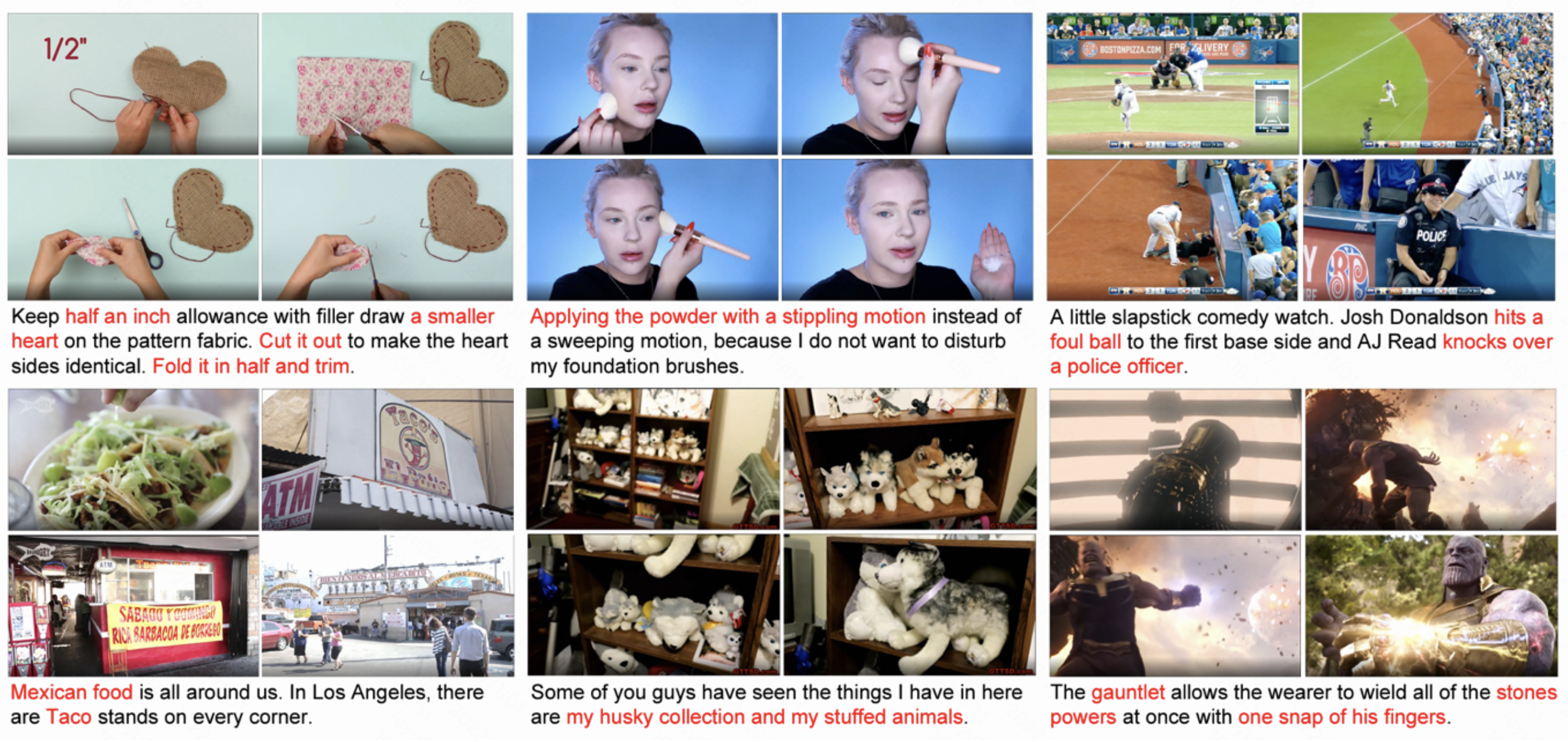

[9] HowTo100M: Learning a Text-Video Embedding by Watching Hundred Million Narrated Video Clips

[10] Frozen in Time: A Joint Video and Image Encoder for End-to-End Retrieval

[11] Advancing High-Resolution Video-Language Representation with Large-Scale Video Transcriptions

[12] VideoBERT: A Joint Model for Video and Language Representation Learning

[13] HERO: Hierarchical Encoder for Video+Language Omni-representation Pre-training

[14] Less is More: CLIPBERT for Video-and-Language Learning via Sparse Sampling

[15] DeCEMBERT: Learning from Noisy Instructional Videos via Dense Captions and Entropy Minimization

[16] VATT: Transformers for Multimodal Self-Supervised Learning from Raw Video, Audio and Text

[17] VIOLET: End-to-End Video-Language Transformers with Masked Visual-token Modeling

[18] Align and Prompt: Video-and-Language Pre-training with Entity Prompts

[19] Learning Video Representations Using Contrastive Bidirectional Transformer

[20] UniVL: A Unified Video and Language Pre-Training Model for Multimodal Understanding and Generation

[21] Frozen in Time: A Joint Video and Image Encoder for End-to-End Retrieval

[22] CLIP-ViP: Adapting Pre-trained Image-Text Model to Video-Language Representation Alignment