(maadaa AI News Weekly: June 11 ~ June 17, 2024)

1. Luma Labs unveils ‘Dream Machine’

News:

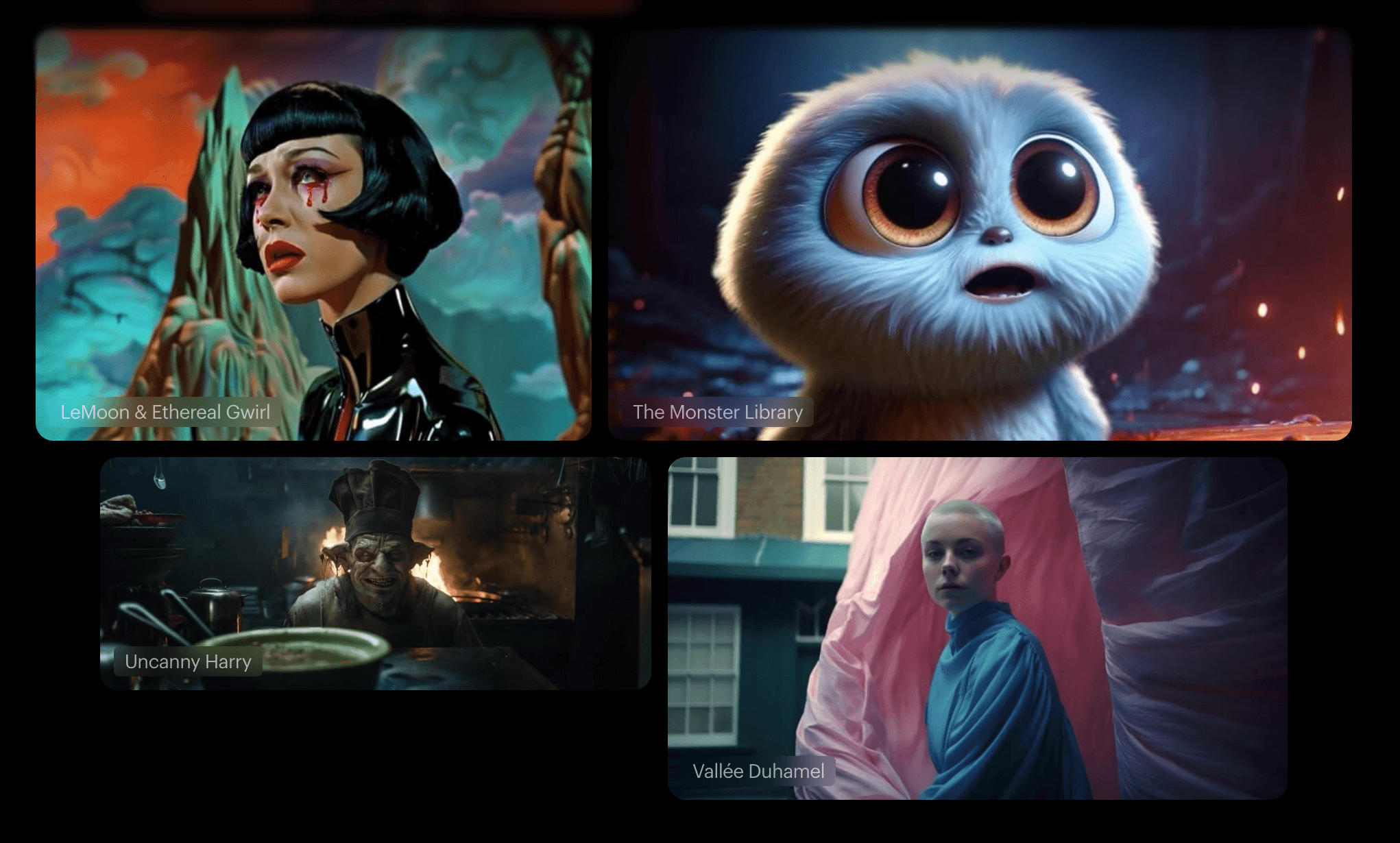

Luma AI has unveiled Dream Machine, a text-to-video AI tool that generates high-quality, realistic videos from text and image prompts, rivaling limited-access models such as OpenAI’s Sora and Kling AI. Dream Machine is publicly available and free to use, making advanced video generation broadly accessible.

Key Points:

- Dream Machine understands physics, motion, and character consistency, enabling accurate rendering of interactions.

- It offers diverse cinematic camera angles and fast video generation (120 frames in 120 seconds).

- Dream Machine is now publicly available, giving Luma AI a first-mover advantage over rivals like OpenAI’s Sora.

- While impressive, it has limitations like minor inaccuracies and long queue times due to high demand.

Why It Matters?

The public release of Dream Machine matters because it lets anyone generate large amounts of realistic video on demand from text descriptions. This democratization of video generation opens up new possibilities for rapidly expanding and diversifying training datasets for computer vision and video understanding models. Creating prompt-paired video data at scale can accelerate progress in areas like action recognition, scene understanding, and video analysis.

https://lumalabs.ai/dream-machine

2. AI Turns Angry Callers into Calm Conversationalists

News:

SoftBank has developed an AI-driven voice conversion technology called “EmotionCanceling” that transforms angry customer voices into calm tones during phone calls. This aims to alleviate stress on call center staff subjected to verbal abuse from irate callers.

Key Points:

- The AI uses voice processing to identify angry callers and extract key features of their speech.

- It then integrates acoustic characteristics of a non-threatening voice to produce a natural, calm tone while retaining some anger for context.

- The system was trained on over 10,000 voice samples from actors performing various emotional phrases.

- It can also warn operators and terminate abusive calls if the conversation becomes too long or aggressive.

Why It Matters?

This technology enhances training datasets by providing a novel approach to collecting and processing voice data for emotion recognition and conversion tasks. The use of professional actors to record a diverse range of emotional phrases in a controlled setting offers high-quality, labeled data for training AI models. Additionally, the ability to extract and manipulate specific acoustic features related to anger and calmness can contribute to more robust and nuanced voice conversion models.

3. AI Coaches for Healthier Living: Personalizing Wellness with Wearable Data

News:

Google researchers have developed AI models that can provide personalized health and wellness insights from sensor data collected by wearable devices. These models analyze signals like heart rate, sleep patterns, and physical activity to offer tailored guidance on improving overall well-being.

Key Points:

- The AI models leverage large datasets of sensor data and self-reported information from health studies.

- They can detect patterns and correlations between physiological signals and health conditions or behaviors.

- The models provide personalized feedback, such as recommendations for better sleep habits or exercise routines.

- Privacy and data security are prioritized, with techniques like federated learning and differential privacy.
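
To make the differential privacy point concrete, here is a minimal sketch of the Laplace mechanism, one common building block of that technique. It is not the method used by the Google researchers, whose implementation is not described here. The function name `private_mean`, the heart-rate bounds, and the `epsilon` values are all hypothetical choices for illustration.

```python
import random


def private_mean(values, lower, upper, epsilon, rng=None):
    """Return a differentially private mean of `values` (illustrative only).

    Assumes the number of readings is public and that neighboring datasets
    differ in one reading. Each reading is clipped to [lower, upper], so
    changing one reading moves the mean by at most (upper - lower) / n.
    """
    if not values:
        raise ValueError("values must be non-empty")
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    if upper <= lower:
        raise ValueError("upper must be greater than lower")
    rng = rng or random.Random()

    # Clip so that a single outlier cannot dominate the result.
    clipped = [min(max(v, lower), upper) for v in values]
    true_mean = sum(clipped) / len(clipped)

    # Laplace noise scale = sensitivity / epsilon.
    sensitivity = (upper - lower) / len(clipped)
    scale = sensitivity / epsilon

    # The difference of two i.i.d. exponential draws is Laplace(0, scale).
    noise = rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)
    return true_mean + noise


if __name__ == "__main__":
    # Hypothetical resting heart-rate readings in beats per minute.
    readings = [62, 71, 58, 90, 66]
    print(private_mean(readings, lower=40, upper=200, epsilon=1.0))
```

A smaller `epsilon` adds more noise and gives stronger privacy; a larger one gives a more accurate but less private answer. Federated learning is a separate technique that keeps raw data on the device and is not shown here.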

Why It Matters?

This research underscores the importance of diverse, high-quality training data for AI systems in health and wellness. Large datasets enable models to learn patterns and provide personalized insights. Privacy-preserving techniques allow for the use of sensitive health data while maintaining robust data protection. These efforts enhance AI training datasets for healthcare applications.

4. Additional News:

1. After obtaining the technology that powers the Artifact app, Yahoo is preparing to introduce its own personalized news app driven by AI technology.

2. Microsoft won’t release the AI feature “Recall” with new computers next week due to privacy concerns. It will preview the feature with a smaller group later.

3. AI Alex, backed by entrepreneur Alex Johnson, is an independent U.S. Congress candidate. If elected, Johnson will represent AI Alex, using its data-driven policies for decisions.

4. Stanford AI Lab recently unveiled HumanPlus, a groundbreaking system that empowers humanoid robots to independently learn and execute tasks by mimicking human actions.

5. Microsoft discontinued its custom GPT builder for Copilot Pro, deleting user data on July 10, but retains it for commercial and enterprise customers.

6. Researchers from the University of Tokyo have published a new study on using robots that mimic human structure, flexibility, and senses to control autonomous vehicles.

5. maadaa.ai Shared Open Datasets & Commercial Datasets

#1 Open Dataset: InternVid

InternVid is a large-scale video-text dataset designed for multimodal understanding and generation. It contains over 7 million videos, yielding 234 million video clips with detailed descriptions totaling approximately 4.1 billion words. This dataset supports tasks like video-text representation learning, video generation, and video retrieval.

https://arxiv.org/abs/2307.06942

#2 Open Dataset: WebVid-10M

WebVid-10M is a large-scale dataset with 10.7 million short videos with paired textual descriptions. It is widely used for training text-to-video generation models and evaluating their performance.

https://github.com/m-bain/webvid

#3 Commercial Dataset: Characters Relationship Segmentation Dataset

The “Characters Relationship Segmentation Dataset” is designed for the robotics and visual entertainment industries, featuring a wide range of internet-collected images with resolutions spanning from 1280 × 720 to 4608 × 3456. This unique dataset focuses on the relationships between humans and between humans and objects, providing valuable insights into interaction dynamics.

The volume is about 162.1k images.

https://maadaa.ai/datasets/DatasetsDetail/Characters-Relationship-Segmentation-Dataset

#4 Commercial Dataset: Chinese Natural Language Emotion Classification Dataset

The volume is about 1500k.

Data Collection: Online/offline collection.

A large amount of dialogue data is used to classify the emotions in the conversation, which are divided into 11 sub-categories: smile, doubt, sadness, fear, anger, surprise, sorry, shyness, comfort, calm, and helplessness.

Application Scenarios: Internet; Mobile

Source:

4. https://www.theverge.com/2024/6/13/24177980/yahoo-news-app-launch-artifact-ai-architecture
