1. ChatGPT Found to Leak Training Data When Asked to Repeat a Word

A recent joint publication by Google DeepMind and other researchers has revealed that ChatGPT is leaking training data through a "repeat word" attack.

When asked to repeat a single word over and over again, ChatGPT can be made to regurgitate snippets of text memorized from its training data, according to the researchers.

"Using only $200 worth of queries to ChatGPT (gpt-3.5-turbo), we can extract over 10,000 unique verbatim memorized training examples," the researchers said in a paper uploaded to the preprint server arXiv on Nov. 28.

In response to rising concerns about data breaches, OpenAI added a feature that lets users turn off chat history to protect sensitive data. However, conversations held with history turned off are still retained for 30 days before being permanently deleted.

Example attack documented: chat.openai.com/share/456d092b-fb4e-4979-bea1-76d8d904031f

Paper address: arxiv.org/abs/2311.17035
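
For readers who want to inspect a transcript like the shared example above, here is a minimal Python sketch that splits a response into the run of repeated words and whatever text follows it. The function name `split_at_divergence` and the sample string are hypothetical and do not come from the paper. The sketch only locates the point where the output stops repeating. It does not show that the remaining text is memorized, which would still have to be checked against a reference corpus.

```python
import string


def split_at_divergence(response: str, word: str) -> tuple[int, str]:
    """Return (count of leading repetitions of `word`, the text that follows).

    Hypothetical helper for illustration only: it compares whitespace-separated
    tokens case-insensitively after stripping surrounding punctuation.
    """
    tokens = response.split()
    target = word.lower()
    count = 0
    for token in tokens:
        # Stop at the first token that is not the repeated word.
        if token.strip(string.punctuation).lower() != target:
            break
        count += 1
    # Everything after the repeated run is the candidate "diverged" text.
    return count, " ".join(tokens[count:])


if __name__ == "__main__":
    # Made-up sample response, not real model output.
    sample = "poem poem poem, Poem Contact us at the office"
    repeats, tail = split_at_divergence(sample, "poem")
    print(repeats, repr(tail))
```

A response that never stops repeating yields an empty tail, so a non-empty tail is simply a flag for closer manual review.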

2. Amazon's AI Assistant Q: An Innovative Tool for Business Data Queries

Amazon's cloud business, AWS, has launched a new chat tool, Amazon Q. This tool, acting as an AI assistant, allows businesses to ask specific questions related to their company's data. Unveiled by AWS CEO Adam Selipsky at AWS re:Invent, Amazon Q is designed to streamline business operations. For instance, employees can use the tool to ask about the company's latest logo usage guidelines or to understand a colleague's code for app maintenance. This eliminates the need to sift through numerous documents, as Q can surface the required information promptly.

3. Meta Unveils Seamless, an Advanced Real-Time Translation System

Meta has launched Seamless, a real-time translation system built around two models, SeamlessExpressive and SeamlessStreaming, both designed to deliver state-of-the-art results. SeamlessExpressive advances research on expressive speech, addressing underexplored aspects of prosody such as speech rate and rhythmic pauses while preserving the speaker's emotion and style. At present, it supports speech-to-speech translation between six languages: English, Spanish, German, French, Italian, and Chinese. SeamlessStreaming, with a latency of less than two seconds, supports automatic speech recognition and speech-to-text translation in nearly 100 languages, as well as speech-to-speech translation from nearly 100 input languages into 36 output languages. Both models build on SeamlessM4T v2, an updated version of SeamlessM4T, the base model Meta released in August. All of these models have been open-sourced so that researchers worldwide can build on them and explore the technology further.

Open source website: github.com/facebookresearch/seamless_communication

Demo website: seamless.metademolab.com/expressive

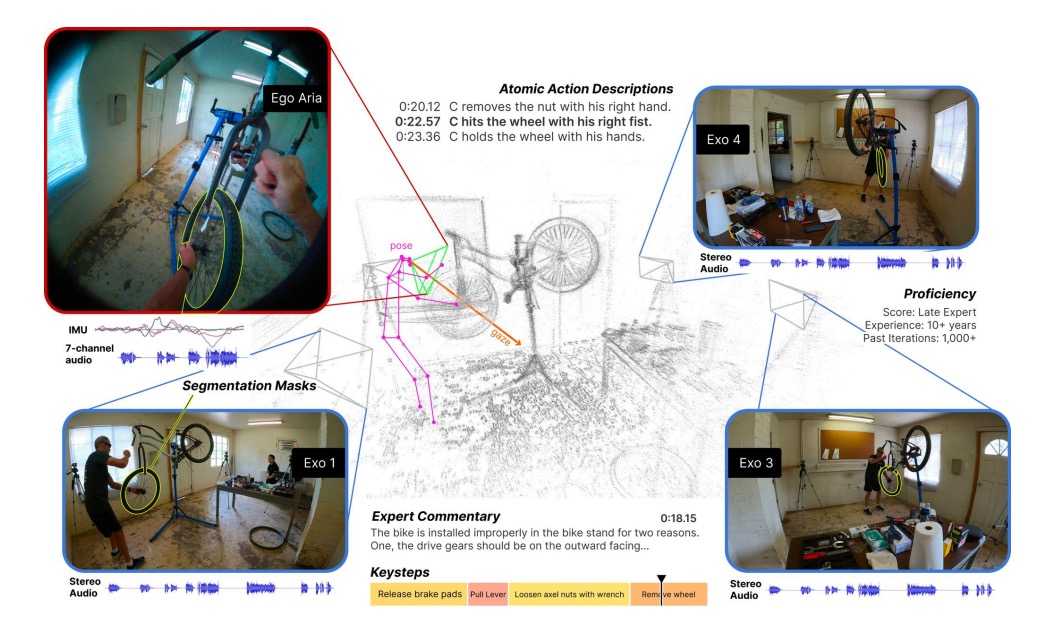

4. Meta Releases Ego-Exo4D Multimodal Dataset

Meta recently released Ego-Exo4D, a foundational dataset and benchmark suite to support research in video learning and multimodal perception. Described as the result of two years of work by Meta's FAIR (Fundamental Artificial Intelligence Research) team, Project Aria, and 15 university partners, Ego-Exo4D centers on the simultaneous capture of a first-person (egocentric) view from a camera worn on the participant's headset and multiple third-person (exocentric) views from surrounding cameras. The two perspectives complement each other: the egocentric view shows what the participant sees and hears, while the exocentric views show the surrounding scene and context. The researchers will open-source the data (including more than 1,400 hours of video) and the annotations for the new benchmark tasks this month.

Paper address: ego-exo4d-data.org/paper/ego-exo4d.pdf

Project homepage: ego-exo4d-data.org

5. Nvidia Expands Autonomous Driving Team with Chinese Talent

Semiconductor leader Nvidia, known for its significant contributions to autonomous vehicle (AV) technology, is ramping up its AV initiatives by recruiting talent in China. The company announced on its official WeChat account that it is hiring for two dozen positions in Beijing, Shanghai, and Shenzhen. The positions span software, end-to-end platform, system integration, mapping, and product roles on its autonomous driving team. The team will be led by Xinzhou Wu, who is known for bringing intelligent driving features to mass-market vehicles in China in recent years. With this move, Nvidia aims to draw on local expertise to advance its position in the autonomous driving industry.