KEYWORDS: ElonMusk, FSD, Tesla, SelfDrivingCar, AutonomousVehicle, DriverlessCar

Last week, Elon Musk livestreamed a 45-minute drive in his personal Tesla Model S running Tesla Full Self-Driving (FSD) V12 on the streets of California.

Although the car nearly ran a red light during the livestream, Musk stressed that data quality is very important and that large amounts of mediocre data do not improve driving.

According to teslaoracle.com, this non-beta version does not rely on a single line of hand-written code for its driving behavior, which is instead learned from data. [1]

Furthermore, according to Danny Shapiro, vice president of automotive at NVIDIA, “The more diverse and unbiased data we have, the more robust and safer the algorithms that make up AI systems like deep neural networks become.”[2]

With that in mind, this article covers the following topics:

- What is a self-driving car?

- What does Level 0 to Level 5 mean?

- maadaa.ai’s experience with self-driving cars

1. What is a self-driving car?

A self-driving car (sometimes called an Autonomous Vehicle or driverless car) is a vehicle that uses a combination of sensors, cameras, radar, and artificial intelligence (AI) to drive between destinations without a human operator while avoiding road hazards and responding to traffic conditions.

Reaching the standard of fully autonomous driving means that no human is required to control the vehicle, or even to be in the car, at any time. A fully autonomous car can go anywhere a conventional car can go and do everything an experienced human driver can do.

techtarget.com defines a self-driving car as “a vehicle that uses a combination of sensors, cameras, radar and artificial intelligence (AI) to travel between destinations without a human operator.” [3]

2. What does Level 0 to Level 5 mean?

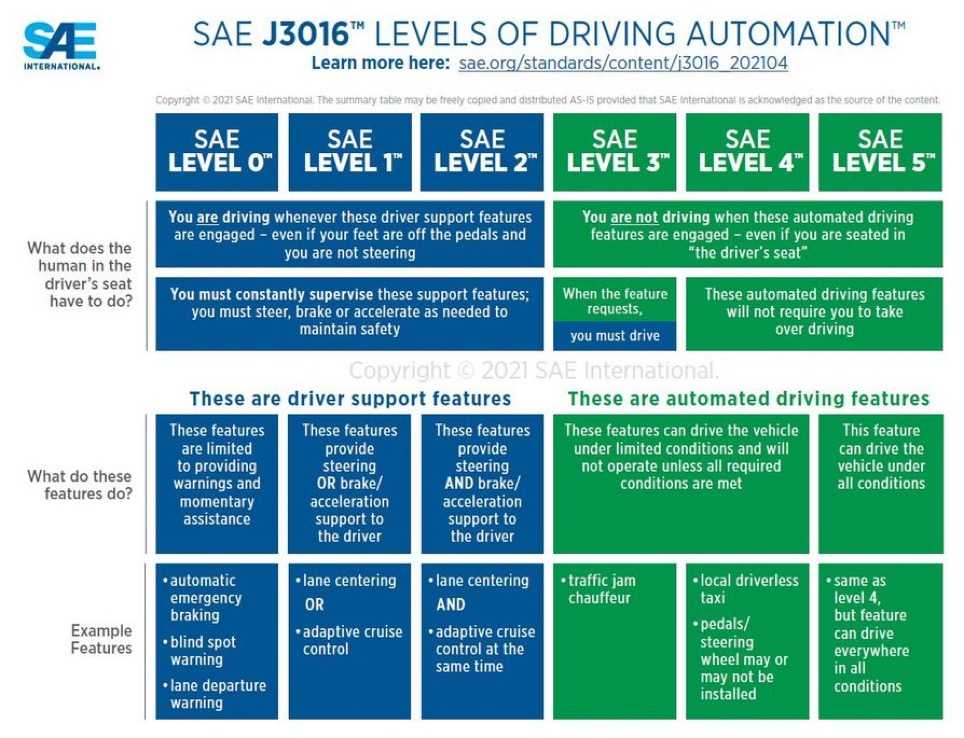

To understand self-driving cars, it helps to know the six levels of driving automation.

The 6 levels of driving automation range from Level 0 (fully manual) to Level 5 (fully autonomous), as defined by the Society of Automotive Engineers (SAE). These levels have been adopted by the U.S. Department of Transportation.

Level 0 means no driving automation: the driver does all the driving, although the car may issue warnings such as lane departure warning. Level 1 covers driver assistance technologies that support either steering or speed, such as adaptive cruise control or lane-keeping assistance, and is common in cars on the road today.

At Level 2, the vehicle can handle steering, acceleration, and braking at the same time, but the driver must keep supervising and remain in control.

Examples of Level 2 systems include Ford BlueCruise, Tesla Autopilot, and GM Super Cruise™.

Level 3 vehicles have conditional self-driving capabilities, such as monitoring their surroundings and changing lanes. Although the driver is not required to drive while the system is engaged, they must stay in the driver’s seat and be ready to take over when the system requests it.

Level 4 automation reduces driver involvement to the point where, within the conditions the system is designed for, it is possible to work on a laptop or watch a movie.

Vehicles from Cruise, a subsidiary of General Motors, and Waymo, a spin-off of Google, are examples of Level 4 autonomy.

Back to Tesla’s FSD: although it is called “Full Self-Driving,” the name does not mean a Tesla can drive completely by itself, because the system cannot operate for long periods without human supervision.

Full Self-Driving does not relieve the Tesla driver of responsibility for the safe operation of the vehicle. So, the Tesla driver must remain alert in the driver’s seat and be ready to take over if the FSD system responds inappropriately.

Tesla’s driver assistance system is currently considered a Level 2 setup according to the SAE Levels of Driving Automation table, as the driver is always in charge.

The highest level of autonomous driving is Level 5. Vehicles at this level can operate completely autonomously in any situation without any human input.

Some car companies are testing Level 5 self-driving cars, but fully self-driving cars have not yet been introduced to the public.
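The levels described above can be captured in a small lookup structure. The following is only an illustrative sketch: the level names follow the SAE naming, while the helper functions and the `EXAMPLE_SYSTEMS` table are hypothetical and simply restate what this article says about each level.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels, as summarized in this article."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SAELevel) -> bool:
    """Levels 0-2: the human driver monitors the road at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_fallback_required(level: SAELevel) -> bool:
    """Levels 0-3: a human must be in the seat and able to take over."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


# Hypothetical table: systems named in the article and the level it assigns them.
EXAMPLE_SYSTEMS = {
    "Ford BlueCruise": SAELevel.PARTIAL_AUTOMATION,
    "Tesla Autopilot": SAELevel.PARTIAL_AUTOMATION,
    "GM Super Cruise": SAELevel.PARTIAL_AUTOMATION,
    "Tesla FSD": SAELevel.PARTIAL_AUTOMATION,
    "Cruise": SAELevel.HIGH_AUTOMATION,
    "Waymo": SAELevel.HIGH_AUTOMATION,
}

if __name__ == "__main__":
    for name, level in EXAMPLE_SYSTEMS.items():
        print(f"{name}: Level {int(level)}, supervise={driver_must_supervise(level)}")
```

Using an integer-valued enum keeps the ordering of the levels explicit, so questions like "does the driver still have to supervise?" become simple comparisons.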

From Level 2 upward, the self-driving system is powered by artificial intelligence (AI).

At the core of the AI systems that drive autonomous vehicle (AV) technology are algorithms. Algorithm development begins with collecting large amounts of high-quality, labeled real-world data. This data is used to train, test, and validate the algorithms.
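As a rough illustration of the "train, test, and validate" step, labeled samples are typically divided into three disjoint sets. The sketch below uses only the Python standard library; the function name `split_dataset` and the 80/10/10 ratio are assumptions chosen for the example, not figures from any AV company.

```python
import random


def split_dataset(samples, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle labeled samples and split them into train / validation / test lists.

    The fractions are illustrative defaults; whatever is left after the
    training and validation shares becomes the test set.
    """
    if train_frac <= 0 or val_frac < 0 or train_frac + val_frac >= 1:
        raise ValueError("fractions must be positive and leave room for a test set")

    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible

    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)

    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    frames = [f"frame_{i:04d}" for i in range(1000)]  # stand-ins for labeled frames
    train, val, test = split_dataset(frames)
    print(len(train), len(val), len(test))
```

Keeping the three sets disjoint matters because a model evaluated on data it was trained on will look more accurate than it really is.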

Continuous enrichment and refinement of algorithm training data is essential to maintain the momentum of AV technology development and advancement.

“To achieve robust and reliable AI systems, it is important that the training data accurately represents the richness and diversity of the real world,” says Ben Alpert, director of engineering at Nauto. “For example, the dataset should represent different environment conditions, including things like low light or glare.”[4]
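One simple way to act on this advice is to check how well each environmental condition is represented in a dataset before training. The following is a minimal sketch under stated assumptions: each sample is a dictionary with a hypothetical `"condition"` tag, and the 5% minimum share is an arbitrary threshold picked for illustration.

```python
from collections import Counter


def condition_coverage(samples, required_conditions, min_share=0.05):
    """Report the share of samples per condition and whether it meets min_share.

    Returns a dict mapping condition -> (share, is_sufficient).
    """
    counts = Counter(sample["condition"] for sample in samples)
    total = sum(counts.values())

    report = {}
    for condition in required_conditions:
        share = counts[condition] / total if total else 0.0
        report[condition] = (share, share >= min_share)
    return report


if __name__ == "__main__":
    # Toy dataset: mostly daylight, with few low-light and glare samples.
    dataset = (
        [{"condition": "daylight"}] * 90
        + [{"condition": "low_light"}] * 8
        + [{"condition": "glare"}] * 2
    )
    for condition, (share, ok) in condition_coverage(
        dataset, ["daylight", "low_light", "glare", "snow"]
    ).items():
        status = "ok" if ok else "under-represented"
        print(f"{condition}: {share:.0%} ({status})")
```

Conditions flagged as under-represented are candidates for additional data collection.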

3. A typical case study of self-driving cars

The following case study shows how these data requirements play out in a real self-driving project.

This case is published with permission from maadaa.ai, a company dedicated to providing professional, agile, and secure data products and services to the global AI industry.

Client:

A leading international autonomous vehicle company

Background:

An international automotive company, known as a leading car manufacturer, is investing in technological advancements to meet increasing consumer demand for high-tech features and convenience. The company sees the potential of automated driving for passenger cars and wants to be at the forefront of this field.

Challenge:

While this company has made initial breakthroughs in automated driving research and development, it recognizes the importance of data. With a current accuracy rate of 95% under varying road and traffic conditions, it needs extensive and specific data to improve its algorithms and ensure their robustness.

Key challenges include:

- Accurately identifying complex traffic signs and signals.

- Recognizing pedestrians and non-motorized vehicles under diverse weather conditions, where current accuracy is 92%.

- Adapting driving strategies across different urban and rural roads.

Solution:

To address this company’s requirements, we offered a comprehensive data collection and annotation solution:

We collected more than 10,000 hours of driving data, ranging from metropolitan areas to villages, from rainy to snowy conditions, and from day to night.

Our annotation team meticulously labeled more than 5 million objects, covering traffic signs, signals, pedestrians, non-motorized vehicles, and obstacles, ensuring a 99.8% accuracy rate for each label.

Specifically:

- Road Relations: We annotated obscured road relations to ensure precise driving in complex scenarios.

- Lane Labels: We added relevant tags to lane masks so that vehicles interpret lane information correctly.

- Sign Relations: We annotated the relations between signs and their corresponding roads or lanes, helping vehicles accurately understand what each traffic sign means.

In addition, we provided the car company with driving strategy suggestions for different road types and traffic conditions.
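To make the annotation types above more concrete, here is one way lane tags and sign-to-lane relations could be represented. This is a hypothetical schema written for illustration only; the class names, fields, and tag values are assumptions and do not describe maadaa.ai's actual data format.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Lane:
    """A lane mask with descriptive tags (hypothetical fields)."""
    lane_id: str
    tags: List[str] = field(default_factory=list)  # e.g. "bus_lane", "occluded"


@dataclass
class Sign:
    """A traffic sign and its meaning (hypothetical fields)."""
    sign_id: str
    meaning: str


@dataclass
class SignRelation:
    """Links a sign to the lane it applies to."""
    sign_id: str
    lane_id: str


def signs_for_lane(lane_id: str, signs: List[Sign], relations: List[SignRelation]) -> List[str]:
    """Return the meanings of all signs that apply to the given lane."""
    by_id = {sign.sign_id: sign for sign in signs}
    return [
        by_id[rel.sign_id].meaning
        for rel in relations
        if rel.lane_id == lane_id and rel.sign_id in by_id
    ]


if __name__ == "__main__":
    lanes = [Lane("lane_1", ["bus_lane"]), Lane("lane_2", ["occluded"])]
    signs = [Sign("sign_a", "speed limit 50"), Sign("sign_b", "no left turn")]
    relations = [SignRelation("sign_a", "lane_1"), SignRelation("sign_b", "lane_2")]
    print(signs_for_lane("lane_1", signs, relations))
```

Storing relations separately from the signs themselves lets one sign apply to several lanes, which is often the case on multi-lane roads.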

Result:

By relying on maadaa’s data solutions, this customer receives not only high-quality training data but also the support and guidance of vetted engineers when it is needed most.

To find out more information about the autonomous Driving Dataset from maadaa.ai, please click: