Upper Eyelid Segmentation Dataset

Progress in video and photo editing applications depends heavily on high-quality datasets for training machine learning models. Our curated datasets help such applications accurately segment and recognize the different elements within images and videos.

The "Upper Eyelid Segmentation Dataset" is designed for the beauty and visual entertainment industries, incorporating internet-collected images with resolutions from 100 x 100 to 400 x 400 pixels. This focused dataset is dedicated to semantic segmentation of the upper eyelid, with annotations covering both eyes, facilitating detailed eye makeup applications and character modeling.

Sample

Specification

Dataset ID: MD-Image-032

Dataset Name: Upper Eyelid Segmentation Dataset

Data Type: Image

Image Volume: About 2.4k

Data Collection: Internet-collected images. Resolution ranges from 100 x 100 to 400 x 400 pixels.

Annotation: Semantic Segmentation

Annotation Notes: Annotation includes both eyes.

Application Scenarios: Beauty; Visual Entertainment

Data Collections

With around 2.4k images, this dataset provides detailed segmentation of the upper eyelids, offering a specialized resource for applications requiring precise eye feature analysis. The annotations for both eyes support the development of personalized beauty solutions and the enhancement of eye expressions in digital characters, contributing to more realistic and engaging visual content.

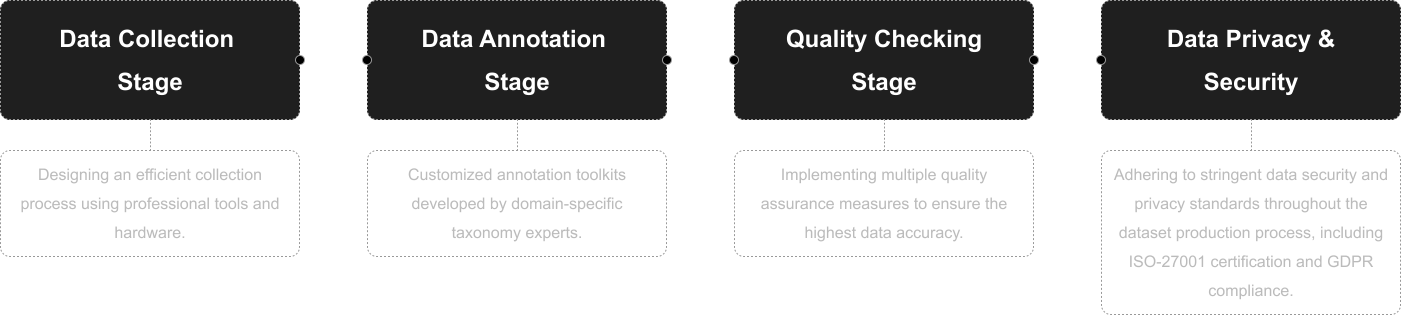
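As a rough illustration of how segmentation annotations like these are typically used, the sketch below checks an image size against the stated 100 to 400 pixel range and scores a predicted upper-eyelid mask against an annotated one with intersection over union (IoU). The mask encoding (rows of 0/1 values, with 1 marking eyelid pixels), the reading of the range as a per-side bound, and the function names are all assumptions made for this example; the dataset's actual file format is not described here.

```python
from typing import Sequence

# Assumed encoding: rows of 0/1 values, where 1 marks upper-eyelid pixels.
Mask = Sequence[Sequence[int]]

# Resolution bounds stated for this dataset, read here as a per-side limit (assumption).
MIN_SIDE, MAX_SIDE = 100, 400


def in_stated_resolution(width: int, height: int) -> bool:
    """Return True if both sides fall inside the 100-400 pixel range."""
    return MIN_SIDE <= width <= MAX_SIDE and MIN_SIDE <= height <= MAX_SIDE


def mask_iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of two binary masks with identical shape."""
    if len(pred) != len(truth) or any(
        len(p_row) != len(t_row) for p_row, t_row in zip(pred, truth)
    ):
        raise ValueError("masks must have the same shape")
    inter = 0
    union = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Two empty masks agree perfectly, so treat that case as IoU 1.0.
    return inter / union if union else 1.0
```

For example, `mask_iou(predicted_mask, annotated_mask)` returns a value between 0 and 1, where 1 means the predicted eyelid region matches the annotation exactly.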

Quality Assurance

Relevant Open Datasets

To supplement this dataset, you can explore the following open datasets for additional resources:

FASSEG Repository

This collection offers two datasets for frontal face segmentation (Frontal01 and Frontal02) and one dataset of faces in multiple poses (Multipose01). These datasets can be valuable for training models to perform face segmentation across different orientations and conditions.

For further information, please contact us.