KEYWORDS: ChatGPT, LLM, GPT-4, GitHub, Dingtalk, data

Recently, new GPT-based applications have been appearing at a rapid pace.

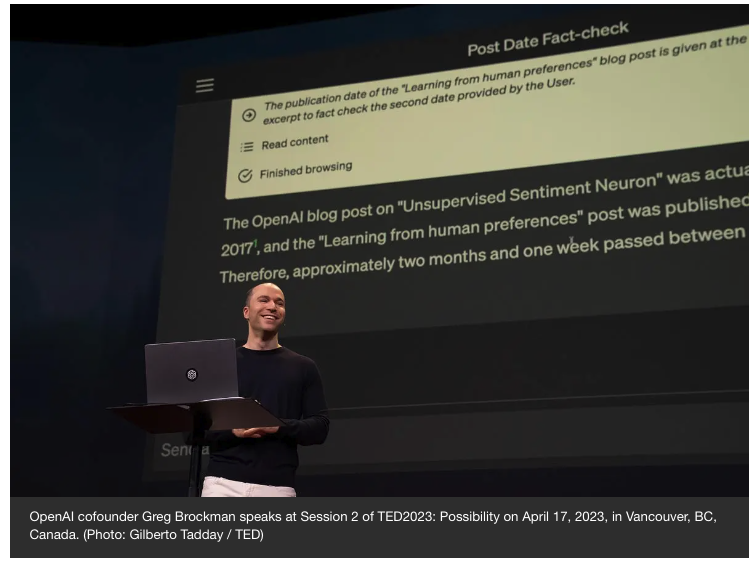

OpenAI’s co-founder Greg Brockman demonstrated ChatGPT’s new plug-ins, which let the chatbot automatically carry out requests such as creating a recipe, adding the corresponding groceries to an e-commerce app, and even drafting a tweet about it.

(Image Source: https://blog.ted.com/openai-cofounder-greg-brockman-demos-unreleased-chatgpt-plug-ins-live-at-ted2023/)

In addition, AutoGPT, a new open-source project based on GPT-4, went viral with 115k stars on GitHub.

AutoGPT can perform tasks on its own without user involvement, including daily event analysis, marketing plan writing, coding, and mathematical operations.

Last month, Adobe released a video showing how to complete tasks such as image generation, model building, image editing, and graphic variants simply by entering text prompts. The video has attracted widespread discussion.

Not to mention GitHub’s Copilot X and Microsoft 365 Copilot: these AI tools help people be more productive and make work easier.

The cases above show that the gap between technology and application scenarios is closing fast.

1. Chinese Giants vs. ChatGPT: Challenge It or Embrace It?

A few months after ChatGPT’s release in late November 2022, the well-known Chinese search engine company Baidu announced “Ernie Bot”, which was widely expected to be the Chinese version of ChatGPT.

But the reality was less impressive than it sounded. On the day Ernie Bot was released, Baidu’s (09888.HK) share price extended its decline to nearly 10%.

After Ernie Bot, more Chinese companies announced that they were either working on their own LLMs or that their new products were the “Chinese version of ChatGPT”.

On April 10, Wang Xiaochuan, founder of another Chinese search engine company, Sogou, officially announced that he was joining the LLM race by founding the company Baichuan Intelligence, and said it aimed “to release a GPT3.5-like LLM by the end of this year.”

While some chose to produce a Chinese version of ChatGPT, others chose to focus on localized application scenarios.

Among them, the office scenario has become a priority for deploying large models, thanks to its good fit with LLMs, its large user base, and its users’ high willingness to pay.

One of the main reasons is that Microsoft 365 Copilot has proved the feasibility of this approach.

Since 2022, players in the Chinese workplace collaboration market have gained enormous numbers of users, especially the three major ones that rose rapidly during the COVID-19 pandemic: Ding Talk from Alibaba, WeCom from Tencent, and Lark Suite from ByteDance.

So far, the AI tools mentioned above are mostly concentrated on office documents. On request, the AI can automatically generate relevant content, including outlines, stories, translations, text continuations, and content summaries.

Chinese tech giants have entered the “AI Office Assistant” battlefield because they know very well that it represents a new revenue growth engine.

2. Ding Talk: A well-deserved localized AI work assistant

With the help of Copilot, Microsoft Office users can generate text, create PowerPoint presentations from Word documents, and use Excel pivot tables and other functions.

However, there is still a huge market for an office product that combines powerful AI with Chinese workplace communication habits.

That makes the release of the AI-driven Ding Talk a remarkable step.

Ding Talk, an enterprise-level AI mobile office platform built by Alibaba Group, is based on Alibaba’s own LLM, Tongyi Qianwen. It is an AI product suited to multiple scenarios, such as office documents, meetings, application development, and group chats.

Here are some examples of what the new Ding Talk can do.

2.1 It all starts with a “/”

In the document scenario, Ding Talk users simply enter “/” (also called the magic wand) to access copywriting functions such as outlining a promotion plan, continuing an article, or expanding a passage. It can also generate images, charts, and diagrams from prompts.

In the group chat scenario, “/” can automatically generate chat summaries that help users quickly catch up on the context, as well as to-do lists.

In the meeting scenario, video conferencing on Ding Talk can generate real-time subtitles, and automatically generate key summaries and to-do lists after the meeting by simply typing “/”.

Even in some offline meetings, people can use Ding Talk to recognize what is said and automatically generate summaries and to-do lists.

2.2 Using one photo to generate a mini-App

The news that GPT-4 can generate a web page from a photo is still fresh in everyone’s mind. However, it seems that this function has not been opened to the public yet.

But with Ding Talk, users can generate a mini-app by photographing and uploading a functional sketch, without writing any code. Users can also refine the result afterward over multiple rounds of conversation with the AI.

With Ding Talk’s AI code generation, the arrival of the “everyone is a developer” era will also accelerate.

2.3 Personal Knowledge Bot

With “/”, you can summon the knowledge bot and create, with one click, a dedicated bot that learns automatically.

During the creation process, the bot can automatically learn the content in a document, a web page, or a knowledge base link, and then intelligently generate conversational Q&A.

Through multiple rounds of interaction, you can also have the bot continually learn from new documents and automatically add and update Q&A content.

Sounds like a personal mini language model for your own knowledge, right?

With the support of AI tools, people can also “master” the skills of multiple jobs — drawing, copywriting, translation, and even programming. The era of “each person is a team” is on the way.

3. LLMs vs. Scenario-based applications: where is the opportunity?

Ding Talk’s CEO Ye Jun announced a plan to make all scenarios AI-driven within the next year. He said, “We are also testing Ding Talk’s personal edition, Search function, Mailbox, AI Assistant, AI Customer Service and much more.”

On the same day as the release of the new Ding Talk, the well-known Chinese office software company Kingsoft Office released its own AI application, WPS AI.

A few days later, Baidu announced that its LLM, Ernie Bot, had also been fully integrated into its internal AI work platform, “Baidu Ruliu”.

These launches have repeatedly confirmed how closely the underlying LLMs and actual application scenarios are linked.

It is not enough to have LLMs or the ability to call them; you also need to apply them to actual application scenarios, which is what brings new opportunities for rapid business growth.

How companies can seize this opportunity to develop their own AI products and services, ones with a deep understanding of their business and customers, is an urgent question.

Here, your company’s proprietary data is a core competitive asset that needs to be put to good use in training.

Building on years of technical experience in data processing and annotation, maadaa.ai has officially launched a specialized data service covering the whole pre-training process for LLMs such as ChatGPT.

maadaa.ai is your reliable AI partner, helping you collect, process, and label your proprietary data, saving you time and ensuring the accuracy of your training data.

Further reading:

Chinese version ChatGPT — Hype or Hope?

GPT-4 and beyond: the infinite possibilities of text-to-X

Chinese version ChatGPT — Baidu’s Ernie Bot?

ChatGPT for Enterprise Scenarios — How to cope with the data challenges